Anthropic's Model Context Protocol (MCP) offers developers a standardized approach to integrating AI models with external data sources and tools. Designed as a flexible interface layer, MCP simplifies interactions between language models and their surrounding environments, allowing dynamic tool discovery, structured invocation, and secure data access. Instead of managing multiple custom integrations, developers can implement MCP once and connect their AI applications to diverse systems efficiently and securely. This guide provides a clear breakdown of MCP's key concepts, compares it with existing API standards like OpenAPI, and includes practical examples to help you effectively incorporate MCP into your own development workflow.

| Concept | Summary Explanation |
|---|---|
| Model Context Protocol (MCP) | MCP is an open standard introduced by Anthropic to unify how AI models (like LLMs) connect with external data sources, tools, and environments, allowing for dynamic two-way communication and streamlined integrations. It's analogous to a "USB-C port for AI." |
| Dynamic Tool Discovery and Invocation | Unlike static API standards (e.g., OpenAPI), MCP enables runtime discovery and structured invocation of tools. This reduces integration overhead and, because MCP servers validate and execute each call, reduces malformed or "hallucinated" tool calls. |
| Security and Access Control | MCP builds on established security practices, using OAuth 2.1 for authorization over HTTP-based transports and centralizing permission management in servers, so that AI applications access only authorized data and respect user-specific contexts. |
| Modularity and Ecosystem Growth | MCP lets developers use a single interface to access a diverse and growing ecosystem of pre-built servers for filesystems, version control, communication platforms, databases, and web services, which makes integrations easier to scale. |
| Agentic Multi-turn Interaction | MCP supports conversational, multi-turn interactions and real-time context fetching, allowing AI agents to work with multiple data sources or tools within a session. This makes it well suited to complex AI workflows. |

What is MCP and How Can It Be Used?

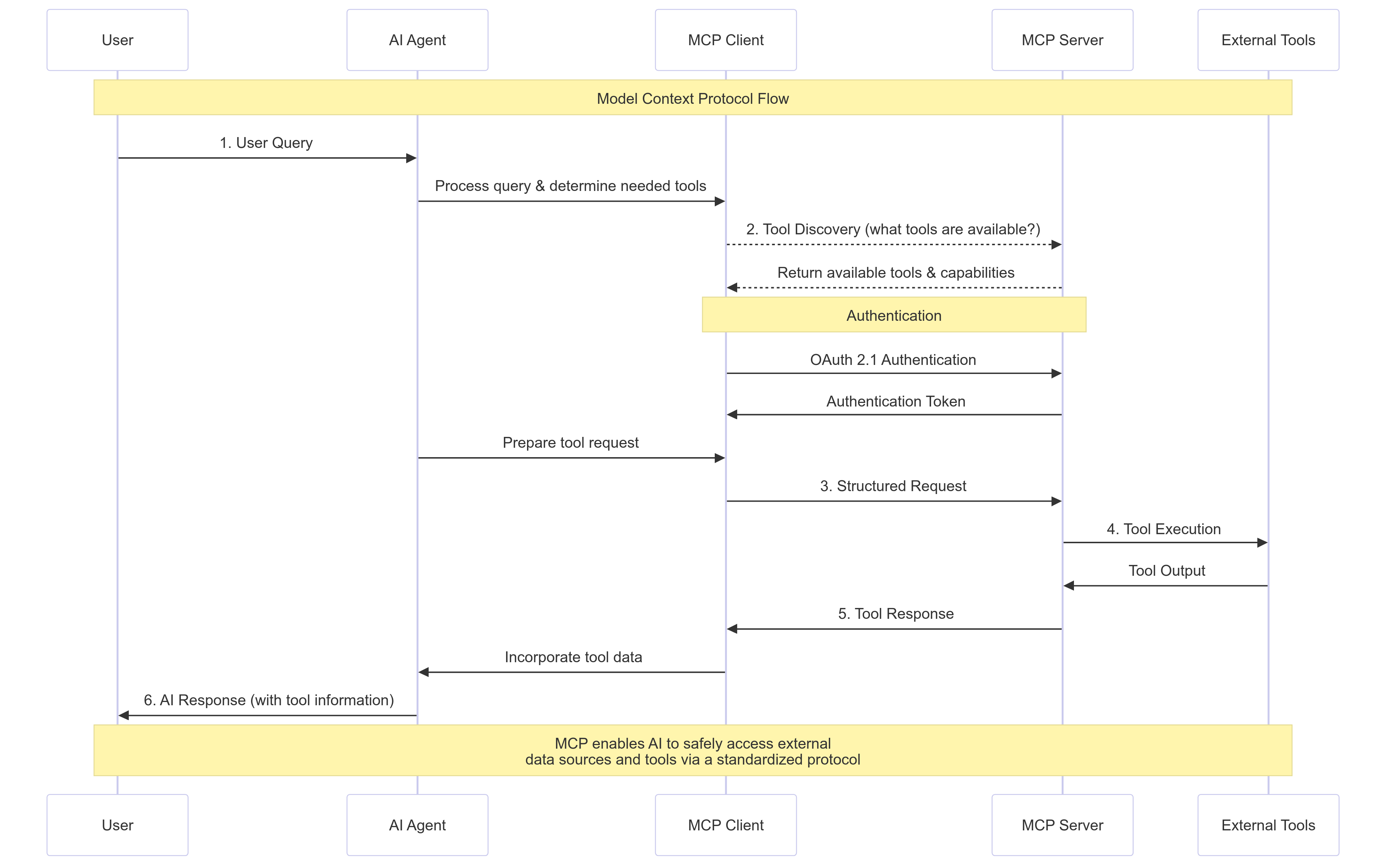

Model Context Protocol (MCP) is an open standard, introduced by Anthropic in late 2024, that defines a unified way to connect AI assistants (LLMs) with external data sources, tools, and environments. Think of MCP as "a USB-C port for AI applications" - a standard interface through which an AI model can access various contexts and resources. The goal is to break LLMs out of their data silos by giving them a consistent method to retrieve relevant information or perform actions in the user's environment.

Key idea: Instead of writing one-off integrations for every tool or database your AI needs to use, you can implement MCP once and gain access to a wide array of resources through a common protocol. MCP enables two-way communication between AI and data sources - an AI application (the MCP client) can query a data source or service (the MCP server) for information or to perform operations, and get structured responses back. Developers can either expose data by implementing an MCP server for a system (e.g. a file system, an API, a database), or consume data by building an MCP client into their AI/LLM application that connects to those servers.
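Concretely, MCP messages are JSON-RPC 2.0 objects. The sketch below builds the two requests a client typically sends to use a tool: `tools/list` to discover what a server offers and `tools/call` to invoke one. The method names follow the MCP specification; the `add` tool, its arguments, and the `make_request` helper are illustrative assumptions, since an SDK normally constructs these messages for you.

```python
import itertools
import json
from typing import Optional

# Request ids increase monotonically; JSON-RPC matches each response to its request by "id".
_ids = itertools.count(1)


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request the way an MCP client would send it."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. Discovery: ask the server which tools it exposes.
list_tools = make_request("tools/list")

# 2. Invocation: call a tool by name with structured arguments.
#    The "add" tool and its arguments are hypothetical.
call_tool = make_request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}})

print(list_tools)
print(call_tool)
```

A server answers the `tools/call` request with the same `id` and a `result` whose `content` list carries the output, for example a text item containing `"5"`.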

How MCP is used: In practice, MCP is used to build AI agents and context-aware applications. For example, an AI coding assistant can use MCP to connect to a Git repository server to read code, or to a Slack server to fetch conversation context. The MCP client in the assistant can dynamically discover what "tools" or data are available from each connected server and call them as needed during a conversation. This enables multi-turn, context-rich interactions - the AI can ask the server for info, get the result, and incorporate it into its response, possibly repeating over multiple steps. Common use cases include retrieving documents from content repositories, querying databases, running web searches, controlling developer environments, and more.
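The multi-step pattern described above boils down to a loop: the model either requests a tool call or gives a final answer, and each tool result is appended to the conversation before the model is consulted again. In this stdlib-only sketch, a scripted function stands in for the LLM and a plain dictionary stands in for tools discovered from an MCP server; both are hypothetical.

```python
from typing import Callable

# Stand-in for tools discovered from an MCP server (hypothetical).
TOOLS: dict[str, Callable[..., int]] = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: returns either a tool call or a final answer.

    It computes (3 + 5) * 12 with two tool calls, reading earlier
    tool results back out of the conversation history.
    """
    tool_results = [m["content"] for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "add", "args": {"a": 3, "b": 5}}
    if len(tool_results) == 1:
        return {"tool": "multiply", "args": {"a": tool_results[0], "b": 12}}
    return {"answer": f"The result is {tool_results[-1]}."}


def run_agent(question: str, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = scripted_model(history)
        if "answer" in decision:
            return decision["answer"]
        # Execute the requested tool and feed the result back into the context.
        result = TOOLS[decision["tool"]](**decision["args"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is (3 + 5) x 12?"))  # The result is 96.
```

The `max_steps` cap is a design choice worth keeping in real agents too, so a model that keeps requesting tools cannot loop forever.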


Some benefits of MCP include:

- Consistency and Modularity: MCP provides a universal interface for context. An AI app can "plug in" to many data sources via MCP without custom code for each. As Anthropic notes, "developers can now build against a standard protocol... replacing today's fragmented integrations with a more sustainable architecture." This modular approach means you maintain one integration (MCP) instead of dozens.

- Growing Ecosystem of Tools: Anthropic and the community have released many pre-built MCP servers (connectors) for popular services. An LLM can directly leverage these to access files, calendar events, source code, knowledge bases, etc., without the developer reinventing those integrations.

- Flexibility: MCP is model-agnostic and vendor-agnostic: it works with any AI client that implements the protocol, whatever LLM sits behind it. This gives developers the flexibility to switch out the underlying model or AI service without losing the integrations. It also helps avoid vendor lock-in, since MCP is an open standard (the specification and SDKs are open-sourced under the MIT license).

- Security by Design: MCP was designed with security and enterprise use in mind (more on this later). It encourages keeping data within your infrastructure and applying uniform access controls.

In summary, MCP is a bridge between AI and data. By using MCP, you enable your LLM-based applications to fetch relevant, real-time context from the tools and systems you care about, leading to more accurate and useful model responses. For developers, MCP abstracts away the details of each external system behind a consistent protocol, simplifying the process of building rich AI-powered features.

How MCP Differs from OpenAPI

MCP might sound similar to existing API standards (like OpenAPI/Swagger), but there are important differences in scope and design. OpenAPI defines a static RESTful API contract (endpoints, request/response schemas) for traditional services. An LLM can consume an OpenAPI spec (for example, via a plugin) and attempt to call those HTTP endpoints by outputting JSON requests. However, this approach has limitations:

- Static vs Dynamic: OpenAPI specs are static documents. An LLM must be pre-loaded with the API spec and interpret it correctly to formulate calls. There's no runtime negotiation - the model might misunderstand parts of the spec or not know about updates. By contrast, MCP is dynamic: an MCP client can query an MCP server at runtime to discover available tools and resources. Tools can be added or removed on the server and the client will see the changes. This means the AI always has an up-to-date view of what it can do, without needing a manually updated spec.

- Structured Invocation & Validation: With a raw OpenAPI approach, the LLM generates a JSON payload and you directly call the API - any mistake in formatting or understanding (e.g. wrong field, missing parameter) can cause errors. MCP introduces a structured invocation layer: when the AI wants to use a tool, it sends a request via the MCP client, and the MCP server validates and executes that request, then returns a structured result. In other words, MCP servers serve as intermediaries that can enforce correct usage (types, required args) and handle errors more gracefully, rather than relying on the LLM to produce perfect API calls. This reduces the chance of the AI "hallucinating" invalid API calls.

- Unified Security & Policy Management: MCP builds security and access control into the protocol. Each MCP server can uniformly enforce authentication, permissions, and logging for its tools. In an enterprise setting, this centralizes governance - you can manage which data an AI can access via MCP in one place. With OpenAPI, security is left to each individual API (OAuth, keys, etc.), and the AI integrator has to handle those varied methods. MCP standardizes the auth flow for remote servers (its authorization specification is based on OAuth 2.1; see the Security section) and is designed so the AI only sees data it's authorized for.

- Multi-turn "Agentic" Interaction: MCP is designed for conversational or agentic usage. Tools exposed via MCP can be called in the middle of an AI <-> user conversation, and their results fed back into the model's context. The protocol supports streaming and long-lived sessions (often via Server-Sent Events or stdio streams) rather than just stateless HTTP calls. This means an AI agent can have a back-and-forth with a tool, handle intermediate steps, etc., more naturally. OpenAPI, being HTTP-based, is request-response and not inherently session-oriented.

- Integration Overhead: Implementing OpenAPI for LLM use often requires an extra layer to translate between natural language and API calls. MCP was created specifically to reduce this friction - it effectively is that layer. As one analysis puts it, "MCP adds a dynamic layer that goes beyond static specs," enabling runtime discovery, consistent error handling, and easier multi-tool orchestration. In theory, one could build a clever system to interpret OpenAPI specs for an LLM (and indeed some have proposed this as an alternative), but MCP provides a ready-made standard for this purpose.
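A stripped-down sketch of the server-side half of these points: tools are registered with a JSON Schema `inputSchema`, discovery reads the live registry, and each call is checked before it runs, with failures returned as a structured result flagged `isError` rather than raised as an exception. The `ToyToolServer` class is hypothetical and checks only required fields and primitive types, a small subset of JSON Schema; the real SDKs handle this for you.

```python
from typing import Any, Callable

# JSON Schema type names mapped to Python types (simplified subset).
_JSON_TYPES = {"integer": int, "number": (int, float), "string": str, "boolean": bool}


def _error(message: str) -> dict:
    # Errors come back as a structured result the model can read, not a crash.
    return {"content": [{"type": "text", "text": message}], "isError": True}


class ToyToolServer:
    """Hypothetical in-memory stand-in for an MCP server's tool layer."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[dict, Callable[..., Any]]] = {}

    def register(self, name: str, description: str, schema: dict, fn: Callable[..., Any]) -> None:
        descriptor = {"name": name, "description": description, "inputSchema": schema}
        self._tools[name] = (descriptor, fn)

    def list_tools(self) -> list[dict]:
        # Discovery reads the registry at call time, so new tools appear immediately.
        return [descriptor for descriptor, _ in self._tools.values()]

    def call_tool(self, name: str, arguments: dict) -> dict:
        if name not in self._tools:
            return _error(f"Unknown tool: {name}")
        descriptor, fn = self._tools[name]
        schema = descriptor["inputSchema"]
        for field in schema.get("required", []):
            if field not in arguments:
                return _error(f"Missing required argument: {field}")
        for field, value in arguments.items():
            spec = schema["properties"].get(field)
            if spec is None:
                return _error(f"Unexpected argument: {field}")
            # bool is a subclass of int in Python, so reject it unless a boolean is expected.
            wrong_bool = isinstance(value, bool) and spec["type"] != "boolean"
            if wrong_bool or not isinstance(value, _JSON_TYPES[spec["type"]]):
                return _error(f"Argument {field} must be of type {spec['type']}")
        return {"content": [{"type": "text", "text": str(fn(**arguments))}], "isError": False}


server = ToyToolServer()
server.register(
    "add",
    "Add two integers.",
    {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
    lambda a, b: a + b,
)
print([t["name"] for t in server.list_tools()])  # ['add']
print(server.call_tool("add", {"a": 2, "b": 3}))
print(server.call_tool("add", {"a": 2}))  # missing "b" -> isError True
```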

In summary, OpenAPI is about defining how to call an API; MCP is about making it easy for AI to use a broad set of tools and data sources reliably. MCP can certainly work with existing APIs - for example, an MCP server could wrap an OpenAPI-defined service - but it adds conventions to better support the AI use-case (tool discovery, unified auth, streaming, multi-turn exchanges, etc.).
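As an illustration of wrapping an existing API, the sketch below maps each operation in an OpenAPI document to an MCP-style tool descriptor (`name`, `description`, `inputSchema`). It's a simplification under stated assumptions: only `parameters` are handled (no request bodies or `$ref` resolution), and the weather API is hypothetical.

```python
# HTTP verbs that mark an operation object inside an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def operation_to_tool(path: str, method: str, operation: dict) -> dict:
    """Map one OpenAPI operation to an MCP-style tool descriptor (parameters only)."""
    properties: dict[str, dict] = {}
    required: list[str] = []
    for param in operation.get("parameters", []):
        schema = dict(param.get("schema", {"type": "string"}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        if param.get("required", False):
            required.append(param["name"])
    # Fall back to a name built from the method and path if there is no operationId.
    fallback_name = f"{method}_{path.strip('/').replace('/', '_')}"
    return {
        "name": operation.get("operationId", fallback_name),
        "description": operation.get("summary", f"{method.upper()} {path}"),
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def spec_to_tools(spec: dict) -> list[dict]:
    return [
        operation_to_tool(path, method, operation)
        for path, item in spec.get("paths", {}).items()
        for method, operation in item.items()
        if method in HTTP_METHODS
    ]


# A tiny, hypothetical OpenAPI fragment.
weather_spec = {
    "openapi": "3.0.0",
    "paths": {
        "/forecast": {
            "get": {
                "operationId": "getForecast",
                "summary": "Get a weather forecast for a city.",
                "parameters": [
                    {"name": "city", "in": "query", "required": True, "schema": {"type": "string"}},
                    {"name": "days", "in": "query", "schema": {"type": "integer"}},
                ],
            }
        }
    },
}

tools = spec_to_tools(weather_spec)
print(tools[0]["name"], tools[0]["inputSchema"]["required"])  # getForecast ['city']
```

A wrapping server would advertise these descriptors from `tools/list` and, on `tools/call`, turn the arguments back into an HTTP request against the original endpoint; that half, along with auth, is left out here.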

Note: There is active debate in the community about MCP vs using OpenAPI/GraphQL directly. Some skeptics feel MCP might add complexity for cases where a simple API call would suffice. Others argue MCP is transformative in simplifying agent integrations across many systems. A common view so far is that MCP shines in scenarios requiring multiple tools or local integrations, whereas for a single well-documented SaaS API, an OpenAPI-based plugin could be adequate. It's worth evaluating on a case-by-case basis, but MCP aims to be the more scalable, "batteries-included" solution for complex AI workflows.

Programming Language Support

One of MCP's strengths is broad language support through official SDKs and community contributions. Anthropic has open-sourced SDKs and tools for multiple languages, making it easier to implement MCP servers or clients in the environment of your choice:

- Python: Official SDK for building MCP servers/clients in Python. Python was one of the first supported languages and is commonly used for rapid prototyping of MCP servers (as we'll see in examples).

- TypeScript/JavaScript: The MCP specification's schema is defined in TypeScript, and many reference server implementations are in Node.js. There is a TypeScript SDK and a collection of reference servers published as NPM packages (e.g. @modelcontextprotocol/server-filesystem) for quick use.

- Java and Kotlin: Official Java and Kotlin SDKs exist. The Java SDK is maintained with VMware's Spring AI team and provides integration for Java ecosystems. Kotlin support (maintained with JetBrains) allows building MCP connectors on the JVM with a more idiomatic Kotlin style.

- C# (.NET): Microsoft contributed an official C# SDK, enabling MCP development on .NET platforms. This is particularly useful for Windows desktop applications or enterprise .NET services that want to integrate AI via MCP.

- Swift: There's an official Swift SDK (maintained with Loopwork) targeting Apple platforms. This allows building MCP into iOS or macOS apps (for example, AI assistants on the desktop that can interface with local Mac data).

- Rust: Originally community-built and now official, the Rust SDK provides a high-performance option for MCP. Rust's strong type safety can help in building robust servers, and its async capabilities align well with MCP's streaming interactions.

These official SDKs cover most major languages. They come with helper classes to manage the MCP protocol handshake, define tools, handle events, etc., so you don't have to implement the spec from scratch.
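For a sense of what those helpers hide, this sketch spells out the opening handshake as plain JSON-RPC objects: the client sends `initialize` with its protocol version, capabilities, and identity; the server replies with its own; and the client confirms with a `notifications/initialized` notification, which carries no `id` because notifications get no response. Field names follow the 2024-11-05 revision of the specification; the client and server names are hypothetical, and the reply is a hand-written example rather than captured output.

```python
import json

PROTOCOL_VERSION = "2024-11-05"  # one published spec revision; SDKs negotiate this for you

initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {},  # optional client features (none in this sketch)
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
    },
}

# Hand-written example of a server reply (hypothetical server).
example_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {"tools": {}},  # the server advertises that it offers tools
        "serverInfo": {"name": "example-server", "version": "0.1.0"},
    },
}


def check_handshake(request: dict, response: dict) -> dict:
    """Validate the server's reply and return the notification that completes the handshake."""
    if response.get("id") != request["id"]:
        raise ValueError("response does not match the request id")
    if "error" in response:
        raise RuntimeError(f"server rejected initialize: {response['error']}")
    # This sketch supports exactly one version; a real client may accept several.
    if response["result"]["protocolVersion"] != request["params"]["protocolVersion"]:
        raise RuntimeError("server chose a protocol version this client does not support")
    # Notifications carry no "id" because no reply is expected.
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


print(json.dumps(check_handshake(initialize_request, example_response)))
```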

Unofficial/Community Support: Being an open protocol, MCP has sparked interest in other language communities as well. For instance, there are at least two independent Go (Golang) implementations of MCP, allowing integration with Go-based services. A preliminary Ruby gem (mcp-ruby) has been created as an experimental server framework. The Elixir community has built an MCP library (e.g. the Hermes MCP project) to leverage Elixir's concurrency for MCP clients/servers. We also see emerging wrappers or integrations in languages like PHP and Python frameworks for easier adoption (though these may not be full SDKs).

In short, if you're developing with MCP, you likely have an SDK available in your preferred language. Officially supported languages (Python, TS/JS, Java/Kotlin, C#, Swift, Rust) have the most complete tooling and up-to-date support, while unofficial ones show the protocol's reach and can be used with some community guidance. Always check the MCP GitHub organization and documentation for the latest on SDK availability and updates.

Popular MCP Servers and Clients

One of the best ways to understand MCP is by looking at real implementations. The "MCP ecosystem" consists of MCP servers (connectors to specific tools/data) and MCP clients or hosts (AI applications or frameworks that use those servers). Let's explore some popular ones:

Popular MCP Servers (Tools/Connectors): Anthropic has open-sourced a suite of reference MCP servers for many common systems. These servers are typically lightweight services (often you can run them locally or as a small web service) that expose certain capabilities via MCP. Examples include:

- File Systems: A server for local files, which supports secure file operations (list, read, write) with configurable access controls. This allows an AI agent to browse or search your local files if permitted.

- Version Control and Code Repos: Servers for Git, GitHub, and GitLab provide tools to read repository files, search code, review pull requests, etc. This is useful for AI pair programmers: the agent can fetch code context or even commit changes via these connectors.

- Communication and Documents: A Slack server offers access to channels and messages (with appropriate authentication), so an AI assistant could answer questions based on Slack history. Similarly, a Google Drive server lets the AI search and retrieve files from a Drive. There are also connectors for services like Google Maps (for location queries) and even a "Memory" server that acts as a knowledge graph for persistent AI memory.

- Databases: Servers for SQL databases like PostgreSQL and SQLite allow read-only querying of database content via natural language. These connectors can inspect DB schemas and let the AI generate SQL under the hood to answer user questions.

- Web and Search: The Brave Search server can perform web searches and return results. A Puppeteer server can drive a headless browser to fetch web page content or even interact with web apps, which is useful for agents needing up-to-date info from websites.

- Miscellaneous: There are time/date utilities (a "Time" server for time zone conversion), a Redis server to interact with key-value stores, a Sentry server to analyze error logs, and many more. The reference list is growing quickly, showcasing how MCP can interface with virtually anything.
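For the read-only database connectors above, one simple way to make "read-only" hold even when the model generates an `INSERT` or `DELETE` is to let the database enforce it. This sketch uses SQLite's `query_only` pragma and returns errors as data the model can read; the `run_readonly_query` helper and the demo table are hypothetical, and the reference servers may enforce read-only access differently.

```python
import sqlite3


def make_readonly(conn: sqlite3.Connection) -> sqlite3.Connection:
    # With query_only ON, SQLite rejects any statement that would modify the database.
    conn.execute("PRAGMA query_only = ON")
    return conn


def run_readonly_query(conn: sqlite3.Connection, sql: str) -> dict:
    """Hypothetical tool body: run model-generated SQL and report failures as data."""
    try:
        rows = conn.execute(sql).fetchall()
        return {"isError": False, "rows": rows}
    except sqlite3.Error as exc:
        return {"isError": True, "message": str(exc)}


# Demo database (hypothetical schema), populated before it is locked down.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('ada'), ('linus')")
conn.commit()
make_readonly(conn)

print(run_readonly_query(conn, "SELECT name FROM users ORDER BY id"))
print(run_readonly_query(conn, "DELETE FROM users"))  # rejected by SQLite
```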

In addition to these reference implementations, third-party companies have embraced MCP by writing official servers for their platforms. For example, Apify provides an MCP server to run its web scraping "actors" and fetch data from websites via MCP. Cloudflare built an MCP server to manage resources on its platform (like Workers, KV storage, etc.) through natural language. Database companies like ClickHouse have an MCP server so you can query a ClickHouse DB with an AI agent. Even niche services (marketing analytics, on-chain blockchain data, etc.) have connectors - e.g., Audiense for audience insights, or Bankless for crypto on-chain queries. This growing catalog means if your AI needs a certain tool, there's likely an MCP server for it (or one can be built using the SDKs).

Popular MCP Clients (AI Applications/Agents): On the other side, various AI apps and frameworks are integrating MCP so they can use those servers:

- Claude Desktop: Anthropic's Claude assistant has a desktop application (Claude Desktop) that includes native support for MCP. In fact, the initial release of MCP was accompanied by Claude Desktop's ability to run local MCP servers and let Claude use them. For example, you can run a filesystem MCP server on your machine and Claude (via Claude Desktop) can then answer questions about your files or code. This was one of the first "host" implementations of MCP and is intended for end-users to seamlessly connect Claude with their data.

- Agent Frameworks (LangChain/LangGraph): The developer community quickly added MCP support to agent orchestration frameworks. LangChain, a popular Python library for building LLM agents, now provides an MCP adapter that converts any MCP tool into a LangChain-compatible tool. LangGraph, a newer framework for multi-agent workflows, similarly works with MCP. Using these, you can load all tools from one or more MCP servers into an AI reasoning agent with a few lines of code (we'll see an example). This integration means hundreds of MCP-exposed tools can be leveraged inside LangChain/LangGraph without custom wrappers.

- OpenAI's Agent SDK: OpenAI released an Agents SDK (a toolkit to create and run AI agents, announced in early 2025), and it too now supports MCP out of the box. A community extension package (openai-agents-mcp) also lets OpenAI Agents dynamically use MCP servers alongside native tools. With minimal configuration, you can give a GPT-4 powered agent access to, say, the same filesystem or Slack MCP servers, and it will use them as if they were any other tool. This shows MCP's neutrality: even OpenAI's ecosystem found it useful to adopt the Anthropic-led standard for tool use.

- Other AI Platforms: Microsoft has integrated MCP into its Copilot Studio (the toolkit for building enterprise copilots/agents). In Copilot Studio, you can "add an action" by connecting to an MCP server - the server's tools then appear as new actions the copilot agent can take. Microsoft highlights that using MCP allows them to apply enterprise controls (network restrictions, data loss prevention, etc.) while giving agents real-time data access. This is a strong sign of MCP's design for not just local apps but also cloud and enterprise scenarios. Similarly, companies like Replit (with their Ghostwriter AI), Sourcegraph (Cody), Codeium, Zed, and others have either integrated or are actively experimenting with MCP in their dev tools - typically to let their AI helpers pull in broader context (like entire codebases, tickets, docs) via MCP connectors.
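Much of what these client integrations do is translate tool descriptors between formats. As a rough illustration, not the actual code of langchain-mcp-adapters or the Agents SDK, this sketch converts an MCP tool descriptor into the function-tool shape OpenAI's Chat Completions API accepts in its `tools` parameter, and turns a returned tool call back into `tools/call` parameters. The `multiply` tool is hypothetical.

```python
import json


def mcp_tool_to_openai(tool: dict) -> dict:
    """Convert an MCP tool descriptor into an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both formats describe arguments with JSON Schema, so it passes through as-is.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def openai_call_to_mcp(tool_call: dict) -> dict:
    """Turn a model's function call back into params for an MCP tools/call request."""
    function = tool_call["function"]
    # OpenAI returns arguments as a JSON-encoded string; MCP expects an object.
    return {"name": function["name"], "arguments": json.loads(function["arguments"])}


multiply_tool = {  # as a server might list it (hypothetical)
    "name": "multiply",
    "description": "Multiply two numbers.",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
}

print(json.dumps(mcp_tool_to_openai(multiply_tool), indent=2))
model_call = {"id": "call_1", "type": "function", "function": {"name": "multiply", "arguments": '{"a": 6, "b": 7}'}}
print(openai_call_to_mcp(model_call))  # {'name': 'multiply', 'arguments': {'a': 6, 'b': 7}}
```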

In short, the MCP server ecosystem covers a wide range of data sources (code, cloud services, files, APIs), and the client ecosystem includes major AI assistants and frameworks. As a developer, you can mix and match: you might run an open-source MCP server for Jira to let an agent fetch ticket info, while using OpenAI's GPT-4 as the agent brain via the OpenAI Agent SDK, all connected through MCP. The pieces are becoming interoperable thanks to the standard protocol.

MCP use on the Desktop vs Service

Is MCP just for local desktop apps? No - MCP was designed to be flexible enough for both local desktop integrations and remote/service-based integrations. However, its initial appeal and design focus skewed towards enabling local or user-controlled environments, which is why you'll see a lot of examples involving desktop apps and IDEs. Let's break it down:

- Desktop/Local Use: A common scenario is running MCP servers on your local machine and connecting them to a local AI agent. For instance, a developer might run a "filesystem" MCP server on their PC to give an AI coding assistant (running in an IDE or Claude Desktop) access to local files. This is a powerful pattern for personal productivity - it's like running your own "AI plugins" locally. Early adopters like Cursor (an AI-enabled code editor) and IDE plugins find MCP valuable here, because it can safely interface with local tools (git, shell, etc.) in a controlled way. The benefit in local contexts is huge: MCP provides a safe abstraction layer for local operations, which would otherwise be risky to expose directly to an AI. The client-server architecture means if the AI tries something outside allowed bounds, the MCP server can block it (e.g., the filesystem server won't read files outside certain directories if configured).

- Service/Cloud Use: MCP is not limited to one machine. An MCP server can just as easily run on a remote server or cloud function and be accessed over the network. In fact, recent developments by Cloudflare demonstrate this: they enabled deployment of remote MCP servers on their platform, making them accessible via HTTPS to any authorized client. In this case, the MCP client (the AI app) could be running anywhere (your machine or even as a cloud service itself) and connect to the MCP server over the internet. The experience for the AI is the same - it discovers tools and calls them - but now the data source might be a multi-user web service. This opens the door to machine-to-machine or SaaS scenarios. For example, you could have an AI agent running in the cloud that connects to a "Salesforce" MCP server also running in the cloud to fetch CRM data for users.

Anthropic's intent is for MCP to be ubiquitous - useful in personal desktop apps and in enterprise service architectures. The protocol itself is transport-agnostic: it supports stdio pipes for local processes as well as network transports such as HTTP with Server-Sent Events (SSE) for remote servers, and implementations can add custom transports. The security model is adaptable to both (see next section): locally you might trust the user and the OS's security, while remotely you'd enforce authentication for each connection.
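With the stdio transport, the client launches the server as a subprocess and the two exchange JSON-RPC messages over stdin/stdout, one message per line; the spec requires that messages contain no embedded newlines. A minimal sketch of that framing, with an in-memory buffer standing in for the pipe (the helper names are hypothetical):

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    # json.dumps escapes newline characters inside strings, so each message stays on one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield one decoded JSON-RPC message per non-empty line."""
    for line in stream:
        if line.strip():
            yield json.loads(line)


# An in-memory buffer stands in for the subprocess pipe.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "result": {"content": [{"type": "text", "text": "line 1\nline 2"}]}})
pipe.seek(0)
for msg in read_messages(pipe):
    print(msg)
```

The multi-line text in the second message survives intact because the newline is escaped in the JSON encoding and only the delimiter is a real newline.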

It's worth noting that while MCP can do cloud, some argue that if you already have well-defined web APIs (OpenAPI, etc.) for a SaaS product, an AI might use those directly without MCP. But if you anticipate complex agent interactions or multiple tools, MCP can simplify even cloud integrations. We're seeing a trend where cloud products (like the ones in the MCP servers list) roll out MCP endpoints to complement their existing APIs, because it allows any AI client to plug in easily.

Summary: MCP is not intended for desktop use only - it's designed for both local and remote. Initially, many examples (Claude Desktop, IDEs) focused on the local case where MCP solved an immediate need. But with features like Cloudflare's remote connectors and Microsoft's Copilot integration, MCP is proving equally valuable in server-to-server, multi-user contexts. The key difference is just deployment: a local MCP server you "install" on your machine vs a remote MCP server you deploy on a server or cloud. The protocol handles either. Just ensure you have the right authentication in place for remote usage, which leads us to...

Security in MCP: Authentication and User Context

Security is a first-class concern in the Model Context Protocol. Whenever you're giving an AI access to tools or data, you must ensure it only accesses what it's allowed to, and only on behalf of the right user. MCP provides multiple layers to achieve this:

- OAuth 2.1 for Authentication/Authorization: For HTTP-based transports, the MCP specification defines an authorization flow based on OAuth 2.1 (with modern security practices) for granting access to MCP servers. Authorization is optional in the spec, and servers launched locally over stdio are expected to take credentials from their environment instead. In practical terms, this means if an MCP server connects to a user-specific data source (say a user's Google Drive or Slack workspace), it should implement an OAuth flow where the user logs in and explicitly grants permission for the MCP client (the AI app) to access that resource. For example, a Slack MCP server might have you go through a Slack OAuth screen to get a token that the server uses. OAuth is great for user-by-user consent - the AI never sees the user's password or raw credentials, just an access token with limited scope. The MCP client will typically carry this token or session info and present it to the server on each request (often handled behind the scenes by the SDK). By using OAuth, MCP avoids the insecure practice of sharing API keys or credentials in prompts; instead it uses proven web security patterns.

- MCP Server Config & Permissions: Each MCP server can enforce fine-grained permissions on the resources it exposes. Since servers are domain-specific, they know what actions make sense and what data to protect. For instance, a Filesystem server can be configured to only allow read access (no writes or deletes) and only within a certain directory - it will refuse any request outside that scope. Similarly, a database server might restrict which tables or columns can be queried by the AI. This built-in policy enforcement means even if the AI tries something malicious or out of bounds, the server is a gatekeeper. Anthropic emphasizes this by design: *"MCP servers handle permissions, access control, and logging uniformly"* for their domain. Many reference servers come with sensible defaults (e.g., the filesystem server by default might operate in a sandbox directory unless you change it).

- User Context Isolation: In multi-user scenarios (like an agent serving many users), it's critical to ensure data doesn't leak between users. MCP facilitates this by encouraging a one-user-per-connection model. The host application (AI agent) should initiate separate MCP client connections for each user context and attach user-specific auth tokens to each. The servers then enforce that context. For example, if two users are using an AI assistant that queries an email MCP server, each user's session will have their own OAuth token and the server will only return that user's emails. The MCP host (the agent framework) is responsible for creating and managing these isolated sessions, and not mixing data between them. In the MCP architecture, the host can manage multiple client instances and "enforce security policies and consent requirements" for each. Essentially, the AI software plays a role in routing the right credentials to the right server calls.

- Transport Security: When MCP is used remotely, it typically runs over secure channels (HTTPS, with SSE for streaming). If you deploy an MCP server on a network, you should use TLS to encrypt traffic, just as you would for an API. The Cloudflare MCP deployment, for example, automatically serves the MCP endpoint over HTTPS with their OAuth provider handling token checks. For a local MCP server, network eavesdropping is less of a concern, since traffic usually stays on a stdio pipe or localhost and is not exposed beyond the machine by default.

- Audit Logging and Governance: MCP servers can (and should) log the requests they handle - e.g., which tool was invoked, by which user/agent, and what the result was. This is useful for debugging and also for security auditing. Because MCP consolidates access through a few servers, it's easier to audit than a scenario where an AI agent is hitting dozens of different APIs all over. Enterprise users can integrate MCP servers with their monitoring and DLP (Data Loss Prevention) systems. Microsoft's integration hints at this: when MCP servers are brought into Copilot Studio, they can leverage "enterprise security and governance controls" like DLP policies and network isolation. So an organization could, say, restrict what data leaves the MCP server or require certain queries to be redacted.
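To make the gatekeeper role concrete, here's a sketch of a file-reading tool that confines access to one directory and writes an audit record for every request, including refused ones. The `make_read_file_tool` factory, the logger name, and the log format are hypothetical; the reference filesystem server has its own configuration options.

```python
import json
import logging
import tempfile
from pathlib import Path
from typing import Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("mcp.audit")  # hypothetical logger name


def make_read_file_tool(allowed_root: Path) -> Callable[[str, str], dict]:
    """Build a read-only file tool confined to allowed_root (requires Python 3.9+)."""
    root = allowed_root.resolve()

    def read_file(path: str, user: str) -> dict:
        # resolve() collapses ".." and follows symlinks, so "../../etc/passwd" cannot escape.
        target = (root / path).resolve()
        allowed = target.is_relative_to(root)
        # Audit every request, including the ones that are refused.
        audit_log.info(json.dumps({"tool": "read_file", "user": user, "path": str(target), "allowed": allowed}))
        if not allowed:
            return {"isError": True, "text": "Access denied: outside the allowed directory"}
        if not target.is_file():
            return {"isError": True, "text": "File not found"}
        return {"isError": False, "text": target.read_text()}

    return read_file


with tempfile.TemporaryDirectory() as sandbox:
    (Path(sandbox) / "notes.txt").write_text("hello")
    read_file = make_read_file_tool(Path(sandbox))
    print(read_file("notes.txt", user="alice"))  # allowed
    print(read_file("../../etc/passwd", user="alice"))  # denied, and still logged
```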

Authentication Methods: In practice, when you set up an MCP server for a protected resource, you have a few options: use an external OAuth provider (like Google, GitHub), use your own identity system, or for local cases, possibly accept a token or API key directly. The MCP spec leans on OAuth 2.1 for standardization. Cloudflare's guide on MCP servers outlines that you can either let the server handle auth itself or integrate with third-party OAuth providers. For example, a GitHub MCP server might redirect the user to GitHub login (OAuth) and get a token which it stores; subsequent tool requests on that server include an Authorization header with that token. If a token expires or is missing, the server can prompt for re-auth. The MCP client and server coordinate this handshake (often the client will open a browser for the user to authenticate, similar to how you'd link a new app to your Google account).
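One concrete piece of that browser-based handshake: OAuth 2.1 requires PKCE (RFC 7636) for the authorization-code flow. The client generates a random `code_verifier`, sends only its SHA-256-derived `code_challenge` with the authorization request, and reveals the verifier when exchanging the code for a token, so an intercepted code is useless on its own. This sketch covers just those PKCE steps; endpoints, redirects, and token storage are omitted.

```python
import base64
import hashlib
import secrets


def s256_challenge(verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(code_verifier)) without '=' padding (RFC 7636)."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def make_pkce_pair() -> tuple[str, str]:
    # 32 random bytes encode to a 43-character URL-safe string (RFC 7636 allows 43-128).
    verifier = secrets.token_urlsafe(32)
    return verifier, s256_challenge(verifier)


verifier, challenge = make_pkce_pair()
# The challenge goes in the authorization request (with code_challenge_method=S256);
# the verifier stays with the client and is sent only when redeeming the code for a token.
print("code_challenge:", challenge)
```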

User Identity Propagation: One thing to clarify - MCP itself doesn't define a user identity concept at the protocol level (it doesn't carry a "user id" in every message or anything). It assumes that if user-specific access is needed, the server will require an auth token or cookie that implicitly ties requests to a user's scope. So, as a developer, you must ensure those tokens are managed per user. If you're building an AI service for multiple users, you'll likely maintain a mapping from user -> active MCP sessions/tokens. The MCP client libraries can help manage tokens (often storing them in a session object once acquired).

Example: Imagine an AI chatbot that can fetch calendar events. There is a Calendar MCP server which requires Google OAuth. When User A uses the chatbot for the first time, the bot (MCP client) will redirect them to log in to Google and grant access. After that, an access token for User A's calendar is stored in that MCP client session. Now User A asks "What's on my schedule tomorrow?" - the agent connects to the Calendar MCP server with User A's token and retrieves events. If User B asks the same question, the agent uses User B's separate session/token. The two never mix. If the agent accidentally used User A's session for User B, the server would simply return User A's data, because it trusts whatever valid token it is given; keeping sessions separate is the agent's job. Following the MCP pattern makes this straightforward, since typically you'd establish one MCP ClientSession per user per server.
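The "one session per user per server" rule can be enforced with a small registry keyed by `(user, server)` that never falls back to another user's entry. The `SessionRegistry` class and the token strings are hypothetical; a real host would keep an SDK session object per entry and refresh tokens as they expire.

```python
from dataclasses import dataclass, field


@dataclass
class UserSession:
    user_id: str
    server: str
    access_token: str


@dataclass
class SessionRegistry:
    _sessions: dict[tuple[str, str], UserSession] = field(default_factory=dict)

    def store(self, user_id: str, server: str, access_token: str) -> UserSession:
        session = UserSession(user_id, server, access_token)
        self._sessions[(user_id, server)] = session
        return session

    def get(self, user_id: str, server: str) -> UserSession:
        # No fallback to another user's session: a missing entry means "start the OAuth flow".
        try:
            return self._sessions[(user_id, server)]
        except KeyError:
            raise PermissionError(f"{user_id} has not authorized {server}") from None


registry = SessionRegistry()
registry.store("user-a", "calendar", "token-for-a")
registry.store("user-b", "calendar", "token-for-b")
print(registry.get("user-b", "calendar").access_token)  # token-for-b
```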

In summary for security: MCP uses industry-standard auth (OAuth 2.1) to ensure only authorized access, and its architecture encourages strong isolation between different servers and sessions. The host application plays a role in managing user consent and context, and the MCP servers enforce that only allowed actions/data are provided. Always follow best practices: never hard-code user credentials, use the OAuth flows or token injection provided by SDKs, configure least-privilege on your MCP servers (only the minimum functionality needed), and monitor the interactions. When done right, MCP lets you confidently integrate sensitive data with AI, because you have multiple checkpoints to prevent misuse.

Using MCP with LangGraph and OpenAI's Agent SDK

Finally, let's look at some concrete examples of how to use MCP in code. We'll demonstrate two scenarios: one using LangGraph (with LangChain's MCP adapter) and another using OpenAI's Agents SDK with MCP. Both examples assume you have the necessary libraries installed.

Example 1: MCP Tools in a LangGraph Agent

In this example, we'll create a simple MCP server in Python and then connect to it using LangGraph (via the LangChain MCP adapter). Our MCP server will provide two tools: add(a, b) and multiply(a, b) - just to illustrate the flow. Then we'll let an AI agent use these tools to answer a math question.

Step 1: Implement a minimal MCP server (in Python). We use the official mcp Python SDK to define a couple of tools and run the server.

We create a FastMCP server, which sets up the protocol under the hood. The @mcp.tool() decorator registers a function as an MCP tool, with its docstring becoming the tool's description (visible to clients). We then run the server with transport="stdio", meaning it communicates via its standard input/output streams, which is convenient when a client spawns it as a subprocess. In practice, you could also run mcp.run(transport="sse") to serve it over an HTTP/SSE interface.

Step 2: Use LangGraph to call the MCP tools. We'll spawn the above server as a subprocess and connect an agent to it:

```python
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

from langchain_mcp_adapters.tools import load_mcp_tools
from langgraph.prebuilt import create_react_agent
from langchain_openai import ChatOpenAI


async def run_agent():
    # Chat model that drives the agent's reasoning
    model = ChatOpenAI(model="gpt-4")

    # How to launch the Step 1 server as a subprocess
    server_params = StdioServerParameters(
        command="python",
        args=["math_server.py"],  # assumed file name; use the full path if needed
    )

    # Start the server process and get read/write streams to it
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()  # MCP handshake with the server

            # Convert the server's MCP tools into LangChain tool objects
            tools = await load_mcp_tools(session)

            # Build a ReAct-style agent that can call those tools
            agent = create_react_agent(model, tools)

            # Ask a question that needs both tools, then print the final answer
            question = "What is (3 + 5) x 12?"
            result = await agent.ainvoke({"messages": question})
            print(result["messages"][-1].content)


if __name__ == "__main__":
    asyncio.run(run_agent())
```

Let's break down what happens here. StdioServerParameters specifies how to start the server (running the Python file). The stdio_client context manager launches the process and gives us read and write streams. We then open a ClientSession on those streams, which manages the MCP protocol session. Once session.initialize() completes the handshake, the client can ask the server for its tools (add and multiply). load_mcp_tools(session), provided by the LangChain MCP adapter, fetches that list and converts each MCP tool into a LangChain tool object that a LangGraph agent can use. Then create_react_agent(model, tools) sets up a ReAct-style reasoning agent with our GPT-4 model and the available tools. We invoke the agent with a query; to answer "(3 + 5) × 12", the model will typically call the add tool with 3 and 5, then pass the result (8) to the multiply tool along with 12. The agent's final message should contain the correct answer, 96.
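Under the hood, those calls become JSON-RPC 2.0 messages. The sketch below builds, by hand, roughly the requests a client sends in this example (the `initialize` handshake, the `initialized` notification, `tools/list`, then one `tools/call` per tool use) and frames them as newline-delimited JSON, the way the stdio transport carries them. It shows only the client-to-server direction; the protocol version string, the client name, and the `request`/`frame` helpers are assumptions, and in practice the SDK builds all of this for you.

```python
import json
from typing import Optional


def request(req_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def frame(msg: dict) -> str:
    """stdio framing: one JSON object per line (json.dumps emits no raw newlines)."""
    return json.dumps(msg) + "\n"


# Client-to-server messages, roughly in the order this example produces them
messages = [
    request(1, "initialize", {
        "protocolVersion": "2025-03-26",  # assumed; the SDK negotiates this
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }),
    {"jsonrpc": "2.0", "method": "notifications/initialized"},  # notifications have no id
    request(2, "tools/list"),  # tool discovery, which load_mcp_tools() relies on
    request(3, "tools/call", {"name": "add", "arguments": {"a": 3, "b": 5}}),
    request(4, "tools/call", {"name": "multiply", "arguments": {"a": 8, "b": 12}}),
]

stream = "".join(frame(m) for m in messages)

# The receiving side splits on newlines and parses each line independently
decoded = [json.loads(line) for line in stream.splitlines()]
for msg in decoded:
    print(msg.get("id"), msg["method"])
```

Seeing the raw messages makes it clear why the server, not the model, performs the arithmetic: the model only chooses the tool name and arguments.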

This example shows an end-to-end flow: MCP server provides tools -> client loads tools -> agent uses tools -> result. We didn't have to manually program the agent to understand math; the tools did the heavy lifting, coordinated via MCP. While this was a trivial case, the same pattern applies to complex scenarios (e.g. a planning agent using a Google Drive tool to answer a question about a document).

Example 2: MCP with OpenAI's Agents SDK

OpenAI's Agents SDK allows developers to create agents that use OpenAI models (like GPT-4) and a set of tools. With an MCP extension (LastMile AI's openai-agents-mcp package), we can add MCP servers as tool sources for those agents. Here's how you might configure and use such an agent:

Step 1: Configuration.

The MCP extension reads a YAML config file, mcp_agent.config.yaml, to learn which MCP servers are available (this is one-time setup). For example, to configure a couple of servers, say the "fetch" web-fetching server and the "filesystem" server, you give each one a name along with the command that launches it and that command's arguments.
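One plausible version of that file is sketched below. It assumes the `mcp` → `servers` → name → `command`/`args` layout used by LastMile AI's mcp-agent configuration format, and the launch commands are assumptions based on the reference servers' usage notes (the fetch server run as a Python package via uvx, the filesystem server via npx, here allowed to access only the current directory). Check the extension's README for the exact schema.

```yaml
# mcp_agent.config.yaml (sketch; layout and commands are assumptions)
mcp:
  servers:
    fetch:
      command: "uvx"
      args: ["mcp-server-fetch"]
    filesystem:
      command: "npx"
      args: ["-y", "@modelcontextprotocol/server-filesystem", "."]
```

The keys under `servers` (`fetch`, `filesystem`) are the names an agent refers to when it requests those servers.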

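Step 2: Define and run the agent.

With the servers configured, the agent itself takes only a few lines of Python (the agent name, instructions, and query text below are illustrative):

```python
import asyncio

from agents import Runner  # core OpenAI Agents SDK
from agents_mcp import Agent, RunnerContext  # MCP extension (openai-agents-mcp)

# Create an agent whose tools come from the configured MCP servers
agent = Agent(
    name="MCP Agent",
    instructions="You are a helpful assistant. Use the available tools to answer the user.",
    tools=[],  # no local tools in this example
    mcp_servers=["fetch", "filesystem"],  # names must match keys in mcp_agent.config.yaml
)


async def main():
    # Runner.run is a coroutine; RunnerContext() picks up the YAML config by default
    result = await Runner.run(
        agent,
        input="Fetch http://example.com and tell me the page title.",
        context=RunnerContext(),
    )
    print(result.final_output)


if __name__ == "__main__":
    asyncio.run(main())
```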

A few important details here: We import Agent from agents_mcp, the MCP extension package, whose Agent accepts an mcp_servers list in addition to regular tools; Runner still comes from the core agents package. The names in mcp_servers correspond to the server keys in our YAML config. The extension automatically looks for mcp_agent.config.yaml (in the working directory or a parent directory) and uses it to launch or connect to those servers. We then call Runner.run() to execute the agent on the given user input; it is a coroutine, so it is awaited, and the answer is read from result.final_output. The RunnerContext() passed as context carries the extension's MCP settings: by default it discovers the YAML config, but it can also be used to specify a custom config path or other settings.

In the query, we asked the agent to fetch a webpage and get the title. Under the hood, this is what happens:

- The agent sees that it has a "fetch" tool (from the fetch MCP server) that can retrieve URLs. It also has filesystem tools (to read and write files) if needed.
- It will likely invoke the fetch tool with "http://example.com" as the argument. The fetch MCP server, launched from the command in the config file, performs the HTTP request and returns, say, the page content or a summary (depending on how the tool is defined).
- The agent can then parse or use that result. If the fetch server returns structured data (for example, a tool that returns only the contents of the page's title tag), the agent gets the title directly. Otherwise, the agent could save the content to a file via the filesystem tool, then search within it, and so on. (This shows how multiple MCP tools can work together.)
- Finally, the agent responds to the user with the page title.
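The "structured data" case in the third step is easy to picture with a hypothetical tool of your own. If you wrote an MCP tool that returned only a page's title, its core logic could be as small as this standard-library sketch; `TitleParser` and `extract_title` are illustrative names, not something the reference fetch server provides.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self._chunks = []
        self.title = None  # set once the first title element has been read

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True

    def handle_data(self, data):
        if self._in_title:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self.title = "".join(self._chunks).strip()


def extract_title(html: str):
    """Return the page title, or None if the page has no title element."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return parser.title


page = "<html><head><title>Example Domain</title></head><body><h1>Hi</h1></body></html>"
print(extract_title(page))  # Example Domain
```

Returning a small, well-defined value like this keeps the model from having to pick the title out of a full page of content.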

The key point is that we didn't have to integrate HTTP fetching into our agent by hand: adding the fetch MCP server granted that capability. The MCP extension handles discovering the server's tools and making them available to the agent alongside anything passed in tools=. Also note that native tools (such as Python functions wrapped with the Agents SDK's function_tool decorator, or hosted tools like web search) can coexist with MCP tools in one agent. The agent doesn't care where a tool comes from; it just sees a unified list of actions it can take.
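To make the "unified list" idea concrete, here is a hypothetical sketch (not the extension's actual code) of how a runtime might merge local and MCP-provided tools into one index: the model sees a flat set of names, while the runtime remembers which server should execute each call. All tool names here are illustrative; in a real agent the MCP names would come from each server's `tools/list` response at startup.

```python
# Illustrative inventory of where each tool comes from
LOCAL_TOOLS = ["get_weather"]
MCP_SERVER_TOOLS = {
    "fetch": ["fetch"],
    "filesystem": ["read_file", "write_file"],
}


def build_tool_index(local_tools, server_tools):
    """Merge local and MCP tools into one name -> origin map, rejecting name clashes."""
    index = {name: "local" for name in local_tools}
    for server, names in server_tools.items():
        for name in names:
            if name in index:
                raise ValueError(f"tool {name!r} from {server!r} clashes with {index[name]!r}")
            index[name] = server
    return index


index = build_tool_index(LOCAL_TOOLS, MCP_SERVER_TOOLS)
print(sorted(index))  # ['fetch', 'get_weather', 'read_file', 'write_file']
print(index["read_file"])  # filesystem
```

Rejecting clashes is only one possible policy; prefixing each tool name with its server name is a common alternative when two servers expose tools with the same name.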

One more detail: authentication and context. In this example, the fetch server needs no credentials for a public site, and the filesystem server is local. If you used, say, a Slack MCP server here, you would need to provide its credentials. The MCP extension handles this via an mcp_agent.secrets.yaml file (for tokens and keys) or environment variables: you'd supply your Slack OAuth token or other credential there so that when the server starts, it can authenticate to Slack's API. Always check the specific server's documentation for which secrets it requires, and never hardcode them in code; keep them in the secrets file or the environment instead.
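When you launch a server yourself (for instance through StdioServerParameters in the LangGraph example, which accepts an `env` mapping for the subprocess), the same rule applies: read secrets from the environment or a secrets store, not from source code. A minimal sketch of that pattern; `server_env` is a hypothetical helper, and the Slack variable names are assumptions to check against the server's documentation.

```python
import os


def server_env(required_vars, environ=os.environ):
    """Collect the secrets a server needs from the environment, failing loudly if any is missing."""
    missing = [name for name in required_vars if not environ.get(name)]
    if missing:
        raise RuntimeError(f"missing required secrets: {', '.join(missing)}")
    return {name: environ[name] for name in required_vars}


# Assumed variable names for a Slack server; confirm them in its documentation
SLACK_VARS = ["SLACK_BOT_TOKEN", "SLACK_TEAM_ID"]

# Demo with a fake environment instead of real credentials
fake_env = {"SLACK_BOT_TOKEN": "xoxb-example", "SLACK_TEAM_ID": "T0000"}
env = server_env(SLACK_VARS, fake_env)
print(sorted(env))  # ['SLACK_BOT_TOKEN', 'SLACK_TEAM_ID']
```

Failing at startup with a clear list of missing variables is easier to debug than a server that launches and then gets rejected by the remote API.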

Wrapping Up the Examples

These Python examples illustrate how MCP fits into existing AI development workflows. With just a few lines, we gave our agents superpowers: the LangGraph agent gained new math tools, and the OpenAI agent gained web fetching and file access. In a real-world scenario, you might plug in many more MCP servers (an email server, a database server, and so on) depending on what your application needs. Thanks to MCP's standardized interface, this scales gracefully: you don't have to rewrite your agent logic for each new integration, because the protocol abstracts it for you.

Recap of Tools Used:

- LangChain MCP Adapters (LangGraph): This allowed loading MCP tools via load_mcp_tools() and using them in a LangGraph agent.
- OpenAI Agents SDK MCP Extension: This allowed specifying mcp_servers in the agent and using a YAML config to spin those servers up.

Both approaches showcase the "plug-and-play" nature of MCP in agent systems. As the MCP ecosystem grows, developers can increasingly rely on off-the-shelf MCP servers and these integrations to quickly build powerful AI applications, while maintaining security and structure around the model's access to data.

Conclusion

Anthropic's Model Context Protocol is a significant step toward standardizing how AI models interact with the world around them. In this guide, we covered what MCP is (an open client-server protocol for AI context), how it differs from a traditional API spec approach, the wide range of language support, and the vibrant ecosystem of servers and clients. We also discussed how MCP is not limited to local desktop use - it's making its way into cloud and enterprise scenarios - and how it emphasizes security and user-specific access control through OAuth and server-side enforcement.

For developers, MCP offers a clear pathway to build AI-enhanced applications: implement or deploy the connectors you need, and use a supportive framework to let your model interact with them. The Python code examples provided a taste of integrating MCP in practice, but you can find many more examples and templates in the official documentation and community repositories. As always, prioritize official docs and best practices (especially on security configurations) when building with MCP.

With MCP maturing, we can expect even more tools, languages, and platforms to join the ecosystem. By following this guide and leveraging the official SDKs, you'll be well-equipped to develop your own MCP servers or integrate existing ones, enabling richer and more context-aware AI experiences in your projects.

Sources

- "Introduction - Model Context Protocol"
- "Introducing the Model Context Protocol \ Anthropic"
- "MCP: Hype or Game-Changer for AI Integrations?"
- "GitHub - modelcontextprotocol/servers: Model Context Protocol Servers"
- "LangChain - Changelog | MCP Adapters for LangChain and LangGraph"
- "GitHub - lastmile-ai/openai-agents-mcp: An MCP extension package for OpenAI Agents SDK"
- "Introducing Model Context Protocol (MCP) in Copilot Studio: Simplified Integration with AI Apps and Agents | Microsoft Copilot Blog"
- "Build and deploy Remote Model Context Protocol (MCP) servers to Cloudflare"
- "GitHub - langchain-ai/langchain-mcp-adapters"
- "Authorization · Cloudflare Agents docs"
- "What's New - Model Context Protocol"
- "modelcontextprotocol/kotlin-sdk - GitHub"
- "mark3labs/mcp-go - GitHub"
- "MCP Ruby Server Skeleton - GitHub"
- "cloudwalk/hermes-mcp: Elixir Model Context Protocol (MCP) SDK"
- "Everything You Need to Know About the Model Context Protocol (MCP) from Anthropic | by allglenn | Mar, 2025 | Stackademic"