Building applications with Large Language Models (LLMs) presents unique challenges, particularly in orchestrating complex tasks and managing memory. Developers often need frameworks to simplify these processes, allowing for more efficient and effective AI agent development. LangChain and LangGraph are popular frameworks that have gained attention for their capabilities in this area.

LangChain specializes in creating multi-step language processing workflows. It helps with tasks such as content generation and language translation by chaining together language model operations.

LangGraph, on the other hand, is a flexible framework for building stateful applications. It handles complex scenarios involving multiple agents and supports human-agent collaboration through features such as built-in statefulness, human-in-the-loop workflows, and first-class streaming support.

Memory management is a crucial aspect of working with LLM-based agents. An AI agent's ability to retain and use information from previous interactions is essential for generating coherent and contextually appropriate responses. However, developers may encounter challenges such as limited context windows and keeping memory consistent over prolonged interactions or tasks.

This article explores the differences between LangChain and LangGraph, focusing on how each framework addresses the challenges of memory management in AI agents. It includes practical examples of building agents with LangGraph. We'll also discuss recent advancements in agent memory and how they can affect your AI applications.

Summary of key LangChain and LangGraph concepts

| Concept | Description |
|---|---|
| Overview of LangChain vs. LangGraph | LangChain is a flexible framework for simple, linear LLM workflows, excelling in task chaining and modularity. LangGraph handles complex workflows through graph-based orchestration, persistent state management, and multi-agent coordination, making it better suited for advanced applications. |
| LangChain features | Includes task chaining to link multiple LLM tasks, modular components for flexibility, integration with external data sources, and strong community support to enhance functionality. |
| LangGraph features | Supports graph-based workflows for dynamic decision-making, cyclical graphs for iterative processes, persistent state management, and integration with LangChain and LangSmith for monitoring and optimization. |
| Key concepts used in LangGraph | Cyclical Graphs: enable loops and repeated interactions, essential for tasks requiring multiple iterations or conditional branching based on dynamic inputs. Nodes and Edges: nodes represent individual workflow components (e.g., LLMs, agents, specific functions), while edges define connections and the flow of data and control between nodes. State Management: persistently maintain state across nodes, allowing applications to pause and resume tasks without losing context, which is crucial for long-running processes or when human intervention is required. Integration with LangChain and LangSmith: builds upon LangChain's task chaining and integrates with LangSmith for comprehensive monitoring and optimization of workflows. |
| Comparison of LangChain vs. LangGraph | Conversational Control: LangChain is suited for linear or simple task chains where each step follows directly from the previous one; LangGraph is ideal for complex conversational flows requiring dynamic decision-making and multiple branching paths. Orchestration: LangChain focuses on chaining tasks in a sequence, suitable for straightforward workflows; LangGraph also chains tasks but adds capabilities for handling complex workflows. State Management: LangChain provides basic memory management through context windows, which may struggle with maintaining state over long or complex interactions; LangGraph offers advanced state management with persistent states across nodes. Use Case Fit: LangChain is suitable for content generation, customer support chatbots, and language translation; LangGraph is better for applications requiring detailed workflow management, like social network analysis, fraud detection, and multi-agent coordination. |
| Migrating from LangChain agents to LangGraph | As projects increase in complexity, LangChain's straightforward task chaining may become limiting. Migrating to LangGraph offers enhanced control and flexibility for managing intricate workflows, especially those involving multiple agents, conditional logic, or cyclical processes. |
| LangGraph Library features | Cycles and Branching: implement loops and conditional logic within workflows, allowing agents to handle dynamic and complex tasks by defining task dependencies through nodes and edges. Persistent State Management: save and restore state at any workflow point using tools like SqliteSaver, ensuring workflows can pause and resume without losing context. Human-in-the-loop Workflows: incorporate human intervention for approving or modifying agent actions, enhancing outcome quality and reliability by allowing critical decisions to be reviewed by humans. |
| Building single and multi-agent workflows | Single-Agent Workflow: demonstrates core concepts such as state management and graph-based workflows by structuring tasks as nodes and transitions as edges, providing a clear and flexible architecture. Multi-Agent Systems: supports workflows where different agents handle specific tasks. For instance, a router agent can direct queries to appropriate expert agents based on user input, enabling specialized and accurate responses. |
| Limitations of LangGraph | Includes setup complexity, potential agent looping issues, and resource-heavy workflows. These require careful design to prevent inefficiencies and manage scalability. |
| How memory will evolve in AI Applications | As AI technologies advance, memory systems become increasingly vital for creating context-aware and personalized experiences. Long-term memory allows AI agents to remember past interactions and user preferences, enhancing personalization and context relevance. Integrations with tools like Zep will continue to improve memory management by providing efficient retrieval, privacy safeguards, and scalable storage solutions. |

Overview of LangChain vs. LangGraph

When building applications with Large Language Models (LLMs), choosing the right framework can significantly impact your project's efficiency and scalability. While both LangChain and LangGraph aim to simplify the development of AI agents, they cater to different needs and complexities.

LangChain: simplifying LLM interactions

LangChain is an open-source framework designed to help developers create applications using LLMs. Its primary strength lies in building simple chains of language model interactions. Think of LangChain as a toolkit that allows you to link various language models and tasks together seamlessly. Whether you're working on a chatbot, content generator, or data processing workflow, LangChain provides the flexibility and modularity needed to compose multiple models and manage prompts effectively.

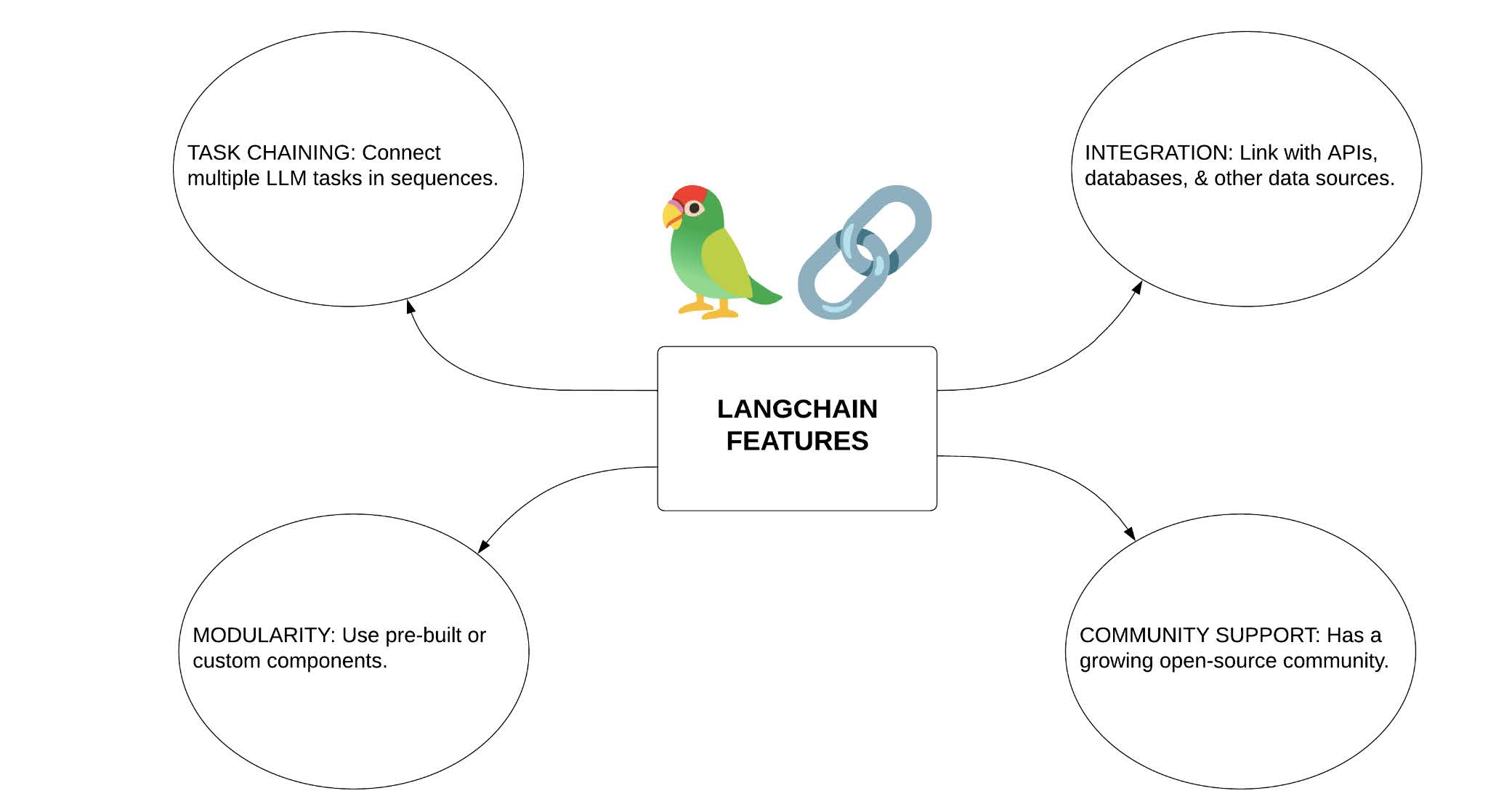

LangChain features

Key features of LangChain include:

- Task chaining: Easily connect multiple language model tasks in a sequence.

- Modularity: Use pre-built components or create custom ones to fit your specific needs.

- Integration: Connect with external data sources like APIs, databases, and files to enrich your applications.

- Community support: Being open-source, LangChain has a vibrant community that contributes modules and extensions, enhancing its capabilities.
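Task chaining is easiest to picture without any framework: each step is a function, and the output of one step becomes the input of the next. The following is a minimal, dependency-free sketch of that idea, not LangChain's API (LangChain composes its own runnables with the | operator); the step functions are hypothetical stand-ins for LLM calls.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: each step's output is the next step's input."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)


# Hypothetical stand-ins for LLM calls; a real chain would call a model in each step
def clean(text: str) -> str:
    return " ".join(text.split())  # collapse extra whitespace


def first_sentence(text: str) -> str:
    return text.split(".")[0].strip() + "."  # a crude "summary"


def format_output(text: str) -> str:
    return f"Summary: {text}"


pipeline = chain(clean, first_sentence, format_output)
print(pipeline("  LangChain links   tasks together.  It is open source. "))
```

Because each step only sees the previous step's output, steps can be swapped or reordered independently, which is the modularity the list above describes.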

LangGraph: orchestrating complex workflows

LangGraph is built to handle more sophisticated and intricate workflows. While LangChain excels in straightforward task chaining, LangGraph takes it a step further by offering a graph-based approach to orchestrate complex conversational flows and data pipelines. This makes LangGraph particularly suitable for projects that require managing multiple agents, conditional logic, and stateful interactions.

Key features of LangGraph include:

- Graph-based workflows: Visualize and manage task dependencies through nodes and edges, making it easier to handle complex interactions.

- Cyclical graphs: Support for cyclical workflows allows for dynamic decision-making and iterative processes within your applications.

- State management: Maintain persistent states across different nodes, enabling functionalities like pausing, resuming, and incorporating human-in-the-loop interactions.

- Integration with LangChain and LangSmith: LangGraph extends the capabilities of LangChain by seamlessly integrating with it, as well as with LangSmith for monitoring and optimization.

Key Concepts of LangGraph

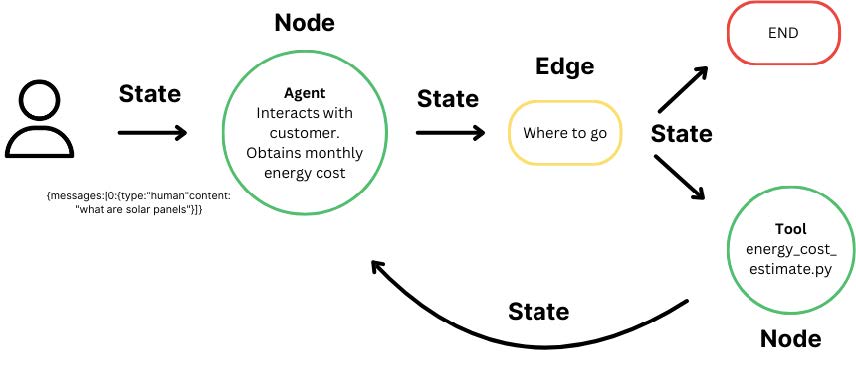

This flow shows how data moves through LangGraph's components internally to reach a decision (source)

Understanding LangGraph's core concepts is essential to leveraging its full potential:

Cyclical graphs

Unlike linear workflows, cyclical graphs allow for loops and repeated interactions. This is crucial for managing tasks that require multiple iterations or conditional branching based on dynamic inputs.
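The control flow of a cycle can be sketched in plain Python: a node runs, a check decides whether to loop back, and a cap on iterations guarantees the loop ends. This is a dependency-free illustration of the idea, not LangGraph's implementation; draft and needs_more_work are hypothetical stand-ins for an LLM call and a quality check. (LangGraph applies a similar safeguard through its recursion limit.)

```python
from typing import Callable


def run_cycle(
    state: dict,
    node: Callable[[dict], dict],
    should_continue: Callable[[dict], bool],
    max_iterations: int = 5,
) -> dict:
    """Run `node` repeatedly until `should_continue` is False or the cap is reached."""
    for _ in range(max_iterations):
        state = node(state)
        if not should_continue(state):
            break
    return state


# Hypothetical node: "revise" a draft by adding one more detail per pass
def draft(state: dict) -> dict:
    return {**state, "draft": state["draft"] + " +detail", "passes": state["passes"] + 1}


# Hypothetical check: loop back until the draft contains three details
def needs_more_work(state: dict) -> bool:
    return state["draft"].count("+detail") < 3


result = run_cycle({"draft": "v0", "passes": 0}, draft, needs_more_work)
print(result)
```

The iteration cap matters: without it, a check that never passes would loop forever.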

Nodes and edges

The nodes represent the individual components of your workflow, such as LLMs, agents, or specific functions. Each node performs a distinct part of the overall task.

The edges define the connections between nodes, determining the flow of data and control. They can be conditional, directing the workflow based on certain criteria, or basic, following a straightforward path.
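The node-and-edge model can be sketched without any library: nodes are functions that return partial state updates, a basic edge names a fixed next node, and a conditional edge is a function that inspects the state and returns the next node's name. The names here (run_graph, the "END" marker, the example nodes) are illustrative assumptions, not LangGraph's API.

```python
from typing import Callable, Dict, Union

END = "END"
Node = Callable[[dict], dict]
Edge = Union[str, Callable[[dict], str]]  # basic edge (fixed target) or conditional edge


def run_graph(nodes: Dict[str, Node], edges: Dict[str, Edge], start: str, state: dict) -> dict:
    """Execute nodes from `start`, merging each node's partial update into the state."""
    current = start
    while current != END:
        state = {**state, **nodes[current](state)}
        edge = edges[current]
        # A conditional edge is called with the state; a basic edge is just a name
        current = edge(state) if callable(edge) else edge
    return state


nodes = {
    "classify": lambda s: {"is_question": s["text"].endswith("?")},
    "answer": lambda s: {"reply": "Here is an answer."},
    "acknowledge": lambda s: {"reply": "Noted."},
}
edges = {
    # Conditional edge: pick the next node from the state
    "classify": lambda s: "answer" if s["is_question"] else "acknowledge",
    # Basic edges: always go to the same place
    "answer": END,
    "acknowledge": END,
}

print(run_graph(nodes, edges, "classify", {"text": "What is LangGraph?"})["reply"])
```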

State management

LangGraph maintains a persistent state across different nodes, which means your application can pause and resume tasks without losing context. This is particularly useful for long-running processes or when human intervention is required at certain points.
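The pause-and-resume idea can be sketched with Python's built-in sqlite3 module: after each step the state is serialized under a thread ID, and a later run with the same ID picks up from the saved copy. This is an assumption-level illustration of the mechanism, not LangGraph's checkpoint format (which also keeps a history of checkpoints rather than only the latest); in LangGraph you would attach a checkpointer such as SqliteSaver instead.

```python
import json
import sqlite3


class CheckpointStore:
    """Save and load a JSON-serializable state dict per thread ID."""

    def __init__(self, path: str = ":memory:"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints (thread_id TEXT PRIMARY KEY, state TEXT)"
        )

    def save(self, thread_id: str, state: dict) -> None:
        # Upsert: this sketch keeps only the latest checkpoint per thread
        self.conn.execute(
            "INSERT OR REPLACE INTO checkpoints (thread_id, state) VALUES (?, ?)",
            (thread_id, json.dumps(state)),
        )
        self.conn.commit()

    def load(self, thread_id: str) -> dict:
        row = self.conn.execute(
            "SELECT state FROM checkpoints WHERE thread_id = ?", (thread_id,)
        ).fetchone()
        return json.loads(row[0]) if row else {"messages": []}


store = CheckpointStore()

# First session: record a message and checkpoint the state
state = store.load("thread-1")
state["messages"].append("user: my order is late")
store.save("thread-1", state)

# Later session (with a file path, this also survives a restart): resume from the checkpoint
resumed = store.load("thread-1")
resumed["messages"].append("user: it was placed on Monday")
store.save("thread-1", resumed)

print(store.load("thread-1")["messages"])
print(store.load("thread-2")["messages"])  # a different thread starts empty
```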

Integration with LangChain and LangSmith

LangGraph doesn't work in isolation. It builds on LangChain's capabilities, so you can create complex workflows while still using LangChain's modular task chaining. Integration with LangSmith adds tracing and monitoring tools, which help you see how your application behaves and optimize it.

Comparison of LangChain vs. LangGraph

LangChain has been around longer, earning a reputation for its versatility and strong community support. It's favored by developers who need a flexible framework to build a wide range of LLM applications without the overhead of managing complex workflows.

LangGraph, being newer, addresses the growing need to manage more sophisticated interactions and workflows. It attracts users who require a higher level of control and visibility over their processes, especially in scenarios where multiple agents and conditional logic are involved.

| Key Area | LangChain | LangGraph |
|---|---|---|
| Conversational Control | Suited for linear or simple task chains where each step follows directly from the previous one. | Ideal for complex conversational flows that require dynamic decision-making and multiple branching paths. |
| Orchestration | Focuses on chaining tasks in a sequence, which suits straightforward workflows. | Uses a graph-based approach to orchestrate complex workflows with dependencies, cycles, and branching logic. |
| State Management | Provides basic memory management through context windows but may struggle to maintain state over long or complex interactions. | Offers robust state management, enabling persistent states, pausing/resuming workflows, and supporting long-term memory. |
| Use Case Fit | Suitable for projects like content generation, customer support chatbots, and language translation where the workflow is relatively straightforward. | Better suited for applications requiring detailed workflow management, such as social network analysis, fraud detection, and multi-agent coordination. |

Migrating from LangChain Agents to LangGraph

As your projects grow in complexity, LangChain's straightforward task chaining can become limiting. Transitioning to LangGraph can offer more control and flexibility for managing intricate workflows. Here's why and how you might consider making the switch.

Complex workflows

If your application involves multiple agents, conditional logic, or cyclical processes, LangGraph's graph-based approach can more effectively handle them.

State management and memory

For projects that require maintaining context across sessions or the ability to pause and resume tasks, LangGraph provides better state and memory management than LangChain. This ensures that AI agents retain relevant information, giving better continuity and responsiveness during complex user interactions.

Visualization and control

LangGraph's visual workflow design makes complex task dependencies easier to understand and manage, which improves maintainability. It also gives you more granular control over agent actions and interactions.

Scalability issues

Consider migrating when LangChain starts to show limitations in handling large-scale or highly interactive workflows.

Integration requirements

Consider migrating when integrating with other tools, such as LangSmith for monitoring and optimization, becomes essential.

Migration example - converting a LangChain agent to LangGraph

Let's walk through a simple example of migrating a LangChain-based chatbot to a LangGraph-based implementation. This will illustrate the practical steps and highlight the benefits of using LangGraph.

LangChain chatbot

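```python
from langchain.chains import LLMChain
from langchain_core.prompts import PromptTemplate
from langchain_openai import OpenAI

# Initialize the LLM (replace the placeholder key, or set OPENAI_API_KEY and omit api_key)
llm = OpenAI(api_key="YOUR_API_KEY")

# LLMChain needs a PromptTemplate, not a plain string; this example prompt has one variable.
# (LLMChain is deprecated in recent LangChain releases in favor of `prompt | llm`;
# it is kept here to mirror the original example.)
prompt = PromptTemplate.from_template("You are a helpful assistant. Answer: {question}")
chain = LLMChain(llm=llm, prompt=prompt)

# Run the chain; invoke() returns a dict whose "text" key holds the completion
response = chain.invoke({"question": "What is LangChain?"})
print(response["text"])
```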

LangGraph chatbot

```python
from typing import Annotated

from typing_extensions import TypedDict
from langchain_openai import ChatOpenAI
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph
from langgraph.graph.message import add_messages


# Define the state: a message list that add_messages appends to
class State(TypedDict):
    messages: Annotated[list, add_messages]


# Initialize the graph
graph_builder = StateGraph(State)

# Initialize a chat model (replace the placeholder key, or set OPENAI_API_KEY)
llm = ChatOpenAI(model="gpt-4o-mini", api_key="YOUR_API_KEY")


# Define the chatbot node
def chatbot(state: State):
    # Send the conversation so far; the chat model returns an AIMessage,
    # which add_messages appends to the state (not a HumanMessage)
    response = llm.invoke(state["messages"])
    return {"messages": [response]}


# Add the node and make it both the entry and the finish point
graph_builder.add_node("chatbot", chatbot)
graph_builder.set_entry_point("chatbot")
graph_builder.set_finish_point("chatbot")

# Compile with a checkpointer so the state persists between turns of one thread
graph = graph_builder.compile(checkpointer=MemorySaver())
config = {"configurable": {"thread_id": "1"}}

# Interactive loop
while True:
    user_input = input("User: ")
    if user_input.lower() in ["quit", "exit", "q"]:
        print("Goodbye!")
        break

    for event in graph.stream({"messages": [("user", user_input)]}, config):
        for value in event.values():
            # Messages are message objects, so read .content rather than ["content"]
            print("Assistant:", value["messages"][-1].content)
```

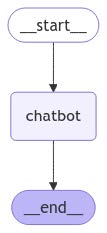

Compiled graph plot for the LangGraph example above, produced from graph_builder.compile()

Explanations:

- State definition: in LangGraph, we define a State that keeps track of the conversation messages. When the graph is compiled with a checkpointer, this state persists between calls, which lets the chatbot remember previous interactions.

- Graph initialization: we create a StateGraph and add a node named "chatbot" which handles the interaction logic using the language model.

- Node function: the chatbot function takes the current state, uses its messages to generate a response with the LLM, and returns that response as a state update.

- Setting entry and finish points: we designate the "chatbot" node as both the entry and finish point, meaning all interactions start and end with this node.

- Interactive loop: the loop allows for continuous user interaction, streaming responses from the graph and maintaining the conversation state.

While LangGraph requires more lines of code for simple tasks, this added complexity enables it to handle more advanced workflows. Its graph-based design allows for branching, looping, and conditional logic, making it ideal for complex, real-world scenarios.

LangGraph features

LangGraph offers a set of features that make it easier to build and manage complex workflows with LLMs. In this section, we'll look at some of its key capabilities, including cycles, branching, persistent state management, and human-in-the-loop workflows.

Cycles and branching

```python
from typing import Annotated

from typing_extensions import TypedDict
from langchain_core.messages import AIMessage
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages


# Define the state: the messages plus the routing decision made by node2
class State(TypedDict):
    messages: Annotated[list, add_messages]
    route: str


# node1: acknowledge the latest user message
def node1(state: State):
    input_msg = state["messages"][-1].content
    response = AIMessage(content=f"Received: {input_msg}")
    return {"messages": [response]}


# node2: check the original user input and record where to go next.
# Keys returned by a node must exist in the state schema, hence the "route" field.
def node2(state: State):
    input_msg = state["messages"][0].content
    if len(input_msg) > 10:
        return {"route": "tool"}
    return {"route": "end"}


# tool: extra processing, reached only for longer inputs
def tool(state: State):
    input_msg = state["messages"][0].content
    processed = input_msg.upper()
    return {"messages": [AIMessage(content=processed)]}


# Create the graph
graph_builder = StateGraph(State)

# Add nodes
graph_builder.add_node("node1", node1)
graph_builder.add_node("node2", node2)
graph_builder.add_node("tool", tool)

# Add edges: START -> node1 -> node2, then branch on the recorded route
graph_builder.add_edge(START, "node1")
graph_builder.add_edge("node1", "node2")
graph_builder.add_conditional_edges(
    source="node2",
    path=lambda state: state["route"],
    path_map={
        "tool": "tool",
        "end": END,
    },
)
graph_builder.add_edge("tool", END)

# Compile the graph
graph = graph_builder.compile()

# Run the graph
inputs = {"messages": [{"role": "user", "content": "Hello, LangGraph!"}]}
for output in graph.stream(inputs):
    for key, value in output.items():
        print(f"Output from {key}: {value}")
```

LangGraph allows you to implement loops and conditional logic within your workflows, enabling agents to handle more dynamic and complex tasks. By representing each agent or function as a node and defining the flow between them with edges, you can create workflows that branch based on specific conditions or repeat certain steps as needed.

For example, consider a workflow where an agent must process user input, perform a series of checks, and decide whether to continue processing or end the task based on the input length. The code above demonstrates this.

Persistent state management

Managing the state across different nodes is crucial for maintaining context and ensuring the workflow can resume seamlessly after interruptions. LangGraph handles this through its persistent state management, which allows you to save and restore the state at any point in the workflow. Here's how you can implement persistent state management:

```python
# Requires the langgraph-checkpoint-sqlite package
from langgraph.checkpoint.sqlite import SqliteSaver

# Reuses graph_builder and inputs from the cycles-and-branching example above.
# In recent releases from_conn_string is a context manager; a file path
# (instead of ":memory:") keeps checkpoints across process restarts.
with SqliteSaver.from_conn_string("checkpoints.sqlite") as memory:
    # Compile the graph with the checkpointer
    graph = graph_builder.compile(checkpointer=memory)

    # Runs with the same thread_id read and write the same saved state
    thread_config = {"configurable": {"thread_id": "1"}}
    for event in graph.stream(inputs, thread_config, stream_mode="values"):
        for key, value in event.items():
            print(f"{key}: {value}")

    # Inspect the latest checkpoint saved for this thread
    print(graph.get_state(thread_config).values)
```

Let's take another example: follow-up question handling

A chatbot needs to handle a conversation where users can ask follow-up questions, and the bot references past messages stored in a persistent state.

```python
from langchain_core.messages import AIMessage
from langgraph.checkpoint.sqlite import SqliteSaver  # pip install langgraph-checkpoint-sqlite
from langgraph.graph import StateGraph, MessagesState, START, END


# Chatbot node: builds context from every message saved so far in this thread
def chatbot_with_context(state: MessagesState):
    # Collect earlier user messages (the state holds message objects, not dicts)
    history = "\n".join(msg.content for msg in state["messages"] if msg.type == "human")

    # The latest user message is the last one in the list
    user_message = state["messages"][-1].content

    # Placeholder reply; a real bot would send `history` to an LLM
    response = f"You asked: {user_message}\nConversation so far:\n{history}"
    return {"messages": [AIMessage(content=response)]}


# Build the graph
graph_builder = StateGraph(MessagesState)
graph_builder.add_node("chatbot", chatbot_with_context)
graph_builder.add_edge(START, "chatbot")
graph_builder.add_edge("chatbot", END)

with SqliteSaver.from_conn_string("chat_memory.sqlite") as memory_saver:
    graph = graph_builder.compile(checkpointer=memory_saver)

    # A thread_id is required once a checkpointer is attached; reuse it for follow-ups
    config = {"configurable": {"thread_id": "user-42"}}

    # Two separate turns: the second one sees the first through the saved state
    for question in ["What is LangGraph?", "How does it store state?"]:
        inputs = {"messages": [{"role": "user", "content": question}]}
        for event in graph.stream(inputs, config, stream_mode="values"):
            event["messages"][-1].pretty_print()
```

Below is an explanation of the code:

- Persistent State Management:
  - SqliteSaver saves the state (conversation history) as a checkpoint after every step of the graph.
  - If the graph is interrupted, the state can be restored from the latest checkpoint.
- Conversation History:
  - The chatbot retrieves past user messages from the state["messages"] object and incorporates them into the response.
- Follow-Up Handling:
  - Each user message is stored, enabling the bot to refer to previous interactions when generating context-aware replies.

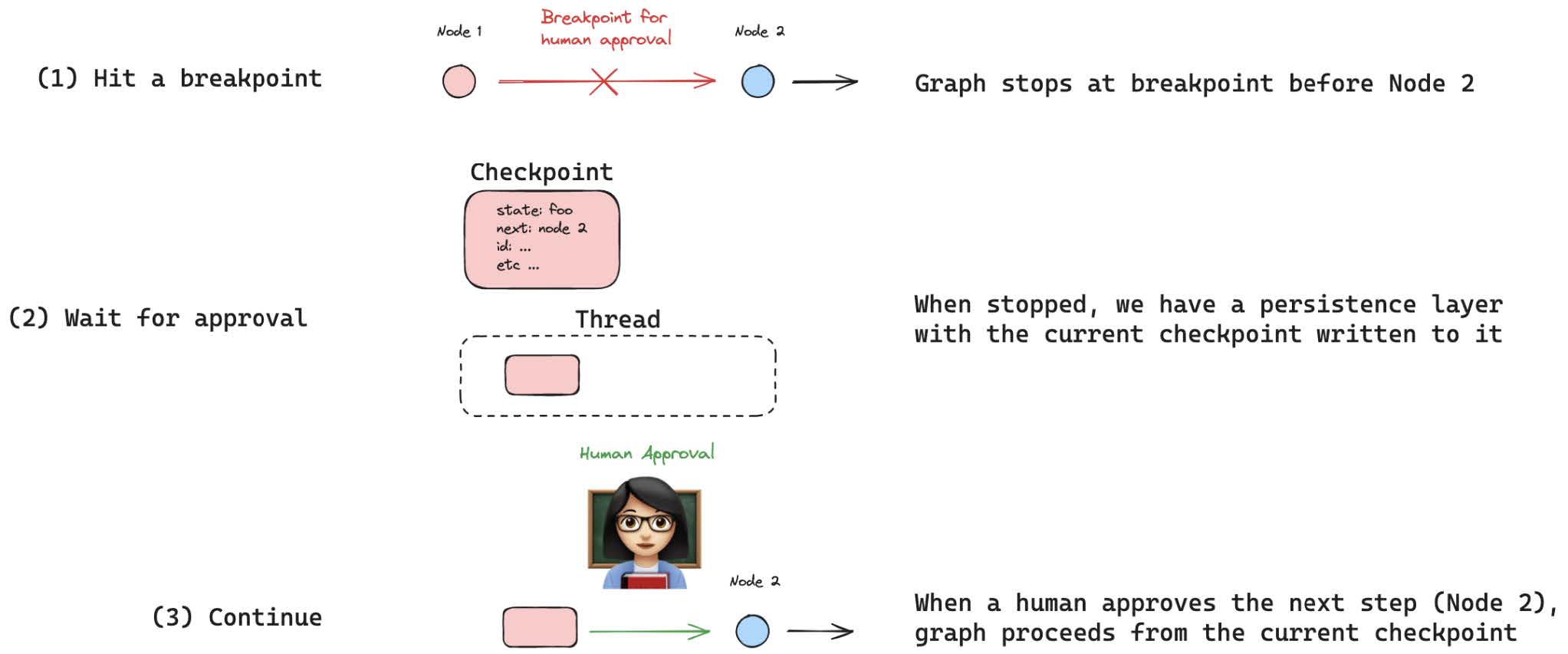

Human-in-the-loop workflows

Incorporating human intervention into automated workflows can enhance the quality and accuracy of the outcomes. LangGraph supports human-in-the-loop (HITL) functionality, allowing humans to approve or modify actions planned by the agent before they are executed.

Collecting feedback

You can integrate human nodes within LangGraph to gather feedback and refine workflow outcomes. This ensures that critical decisions are reviewed by a human, adding an extra layer of reliability.

Editor node implementation

Using LangGraph, you can create nodes that involve humans in the decision-making process. For instance, an editor node can refine responses based on human feedback, improving the overall user experience.
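An editor node can be sketched as a function that takes the agent's draft plus a reviewer callback, which keeps the human step pluggable and easy to test. The reviewer callable and its ("approve" / "edit", text) convention are assumptions made for illustration; an interactive script could wrap input() in the callback, while a LangGraph application would normally pause through LangGraph's interrupt mechanism instead.

```python
from typing import Callable, Tuple

# Hypothetical convention: a reviewer returns ("approve", "") or ("edit", "<new text>")
Reviewer = Callable[[str], Tuple[str, str]]


def editor_node(state: dict, reviewer: Reviewer) -> dict:
    """Apply a human decision to the agent's draft before it is sent."""
    decision, new_text = reviewer(state["draft"])
    if decision == "approve":
        return {**state, "final": state["draft"], "edited": False}
    if decision == "edit":
        return {**state, "final": new_text, "edited": True}
    raise ValueError(f"Unknown review decision: {decision!r}")


# Scripted reviewers stand in for a person
def approve_all(draft: str) -> Tuple[str, str]:
    return ("approve", "")


def soften_tone(draft: str) -> Tuple[str, str]:
    return ("edit", draft.replace("No.", "Unfortunately, that isn't possible."))


state = {"draft": "No. Refunds take 5 days."}
print(editor_node(state, approve_all)["final"])
print(editor_node(state, soften_tone)["final"])
```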

Agentic human interaction

LangGraph also supports more dynamic HITL systems, where tools like HumanInputRun enable multiple interactions and refinements throughout the workflow. This is particularly useful for complex tasks that require iterative improvements.

Illustration of LangGraph's human-in-the-loop functionality with checkpoints and approval flow. (source)

Code tutorial: adding human-in-the-loop nodes

Here's an example of how to add human-in-the-loop functionality to your workflow:

```python
from typing import Annotated

from typing_extensions import TypedDict
from langchain_core.messages import AIMessage  # message classes live in langchain_core
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages


# State: the messages plus the reviewer's decision
class State(TypedDict):
    messages: Annotated[list, add_messages]
    approved: bool


# node1: draft a response to the user's input
def node1(state: State):
    user_input = state["messages"][-1].content
    response = AIMessage(content=f"Draft reply to: {user_input}")
    return {"messages": [response]}


# human_review: ask a person to approve the draft before continuing.
# input() blocks the process; in production, LangGraph's interrupts
# (e.g. compile(checkpointer=..., interrupt_before=["human_review"])) pause the graph instead.
def human_review(state: State):
    print("Draft:", state["messages"][-1].content)
    approval = input("Approve this draft? (yes/no): ")
    return {"approved": approval.strip().lower() == "yes"}


# node2: runs only after approval
def node2(state: State):
    return {"messages": [AIMessage(content="Approved draft sent to the user.")]}


# Create the graph
graph_builder = StateGraph(State)

# Add nodes
graph_builder.add_node("node1", node1)
graph_builder.add_node("human_review", human_review)
graph_builder.add_node("node2", node2)

# Add edges: node1 -> human_review, then branch on the reviewer's decision
graph_builder.add_edge(START, "node1")
graph_builder.add_edge("node1", "human_review")
graph_builder.add_conditional_edges(
    source="human_review",
    path=lambda state: "node2" if state["approved"] else "end",
    path_map={
        "node2": "node2",
        "end": END,
    },
)
graph_builder.add_edge("node2", END)

# Compile the graph
graph = graph_builder.compile()

# Run the graph
inputs = {"messages": [{"role": "user", "content": "Please refund my last order."}]}
for output in graph.stream(inputs):
    for key, value in output.items():
        print(f"Output from {key}: {value}")
```

In this example:

- Node-1 processes the initial user input.

- Human-Review pauses the workflow to ask for human approval.

- Depending on the response, the workflow either proceeds to Node-2 or ends.

This setup ensures that critical actions are vetted by a human, enhancing the reliability of the workflow.

Building single and multi-agent workflows

Building a single-agent workflow in LangGraph is straightforward and demonstrates the core concepts of the framework, such as state management and graph-based workflows. By using a graph-based design, LangGraph structures tasks as nodes and transitions as edges, providing a clear and flexible workflow architecture. Additionally, it highlights the real-time execution flow, where state updates seamlessly propagate through the graph.

Here's a step-by-step guide to building a basic chatbot.

Define the State

```python
from typing import Annotated, TypedDict

from langgraph.graph import StateGraph, MessagesState, START, END
from langgraph.graph.message import add_messages


# The state holds the conversation messages; add_messages appends new ones
class State(TypedDict):
    messages: Annotated[list, add_messages]
```

The state structure holds the conversation messages, maintaining context throughout the interaction.

Create Node Functions

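```python
def receive_message(state: State):
    # Messages in the state are message objects, so read .content
    user_input = state["messages"][-1].content
    # Echo the input back as the assistant's reply
    response = {"role": "assistant", "content": f"You said: {user_input}"}
    return {"messages": [response]}
```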

Define how the agent processes incoming messages. In this example, the agent simply echoes the user's input.

Build the Graph

Construct the workflow by adding nodes and defining the flow from start to end.

```python
# Use the State defined above and wire START -> receive_message -> END
graph_builder = StateGraph(State)
graph_builder.add_node("receive_message", receive_message)
graph_builder.add_edge(START, "receive_message")
graph_builder.add_edge("receive_message", END)
graph = graph_builder.compile()
```

Run the Chatbot

Initiate the chatbot with a user message and stream the responses.

```python
from langchain_core.messages import HumanMessage

inputs = {"messages": [HumanMessage(content="Hello, LangGraph!")]}
for output in graph.stream(inputs):
    for key, value in output.items():
        print(f"Output from {key}: {value}")
```

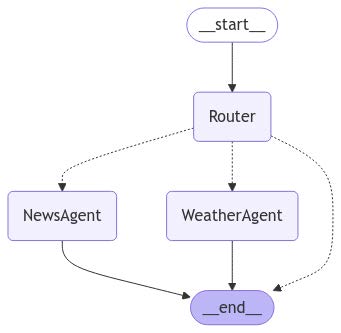

Multi-Agent Systems

For more complex applications, LangGraph supports multi-agent workflows where different agents handle specific tasks. Here's how to build a multi-agent system with a router agent directing queries to the appropriate expert agents.

Define the State

Similar to the single-agent workflow, the state holds conversation messages.

```python
class State(TypedDict):
    messages: Annotated[list, add_messages]
    # Name of the expert the router picked: "weather", "news", or "end"
    next: str
```

Create Agent Functions

Define agents for routing, weather, and news. The router directs queries to the appropriate agent based on the user's input.

```python
def router_agent(state: State):
    # Read the latest user message and record which expert should handle it
    user_input = state["messages"][-1].content.lower()
    if "weather" in user_input:
        return {"next": "weather"}
    elif "news" in user_input:
        return {"next": "news"}
    return {"next": "end"}


# Placeholder experts; real agents would call an API or an LLM
def weather_agent(state: State):
    return {"messages": [{"role": "assistant", "content": "Today's forecast: sunny."}]}


def news_agent(state: State):
    return {"messages": [{"role": "assistant", "content": "Here are today's top headlines."}]}
```

Build the Graph

Set up the workflow by adding nodes and defining conditional paths based on the router's decisions.

```python
graph_builder = StateGraph(State)

graph_builder.add_node("router", router_agent)
graph_builder.add_node("weather_agent", weather_agent)
graph_builder.add_node("news_agent", news_agent)

graph_builder.add_edge(START, "router")

# Branch on the decision the router stored in state["next"]
graph_builder.add_conditional_edges(
    source="router",
    path=lambda state: state["next"],
    path_map={
        "weather": "weather_agent",
        "news": "news_agent",
        "end": END,
    },
)

graph_builder.add_edge("weather_agent", END)
graph_builder.add_edge("news_agent", END)

graph = graph_builder.compile()
```

Run the multi-agent workflow

Provide user input and observe how the router directs the query to the appropriate agent.

```python
inputs = {"messages": [HumanMessage(content="What's the weather like today?")]}
for output in graph.stream(inputs):
    for key, value in output.items():
        print(f"Output from {key}: {value}")
```

Compiled graph plot for the multi-agent LangGraph example above

This setup allows the router agent to direct user queries to the appropriate expert agent based on the input, enabling more specialized and accurate responses.

Persistence and state management

In any AI workflow, managing and retaining context is essential for seamless interactions. LangGraph addresses this need with robust persistence and state management capabilities, ensuring workflows can maintain context and recover gracefully from interruptions.

Short-term memory

LangGraph efficiently manages short-term memory within conversations using state checkpoints. This approach is ideal for scenarios like basic customer support, where maintaining the context of the current interaction is sufficient.

Example - basic customer support

```python
from langchain_core.messages import AIMessage, HumanMessage
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph, MessagesState, START, END


# Support agent: answers the latest query and can see the whole thread
def support_agent(state: MessagesState):
    user_query = state["messages"][-1].content
    turns = sum(1 for m in state["messages"] if m.type == "human")
    response = f"[turn {turns}] Thanks for reaching out about: {user_query}"
    return {"messages": [AIMessage(content=response)]}


graph_builder = StateGraph(MessagesState)
graph_builder.add_node("support_agent", support_agent)
graph_builder.add_edge(START, "support_agent")
graph_builder.add_edge("support_agent", END)

# An in-memory checkpointer keeps short-term memory for each thread_id
graph = graph_builder.compile(checkpointer=MemorySaver())
config = {"configurable": {"thread_id": "ticket-1"}}

for query in ["My order hasn't arrived.", "It was order #123."]:
    inputs = {"messages": [HumanMessage(content=query)]}
    for output in graph.stream(inputs, config):
        for key, value in output.items():
            print(f"Output from {key}: {value['messages'][-1].content}")
```

Limitations of short-term memory

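As conversations grow, so does the chat history. Because the accumulated messages are typically sent to the model on every turn, they take up more and more of the context window, which increases latency and cost and can eventually exceed the model's limit. Managing this growth is essential to maintain efficiency.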

Growing chat history

Long conversations result in a large accumulation of messages, which can slow down processing and increase costs. To address this, developers need strategies to manage and optimize chat history.

Need for pruning and selecting relevant history

Implementing pruning techniques, such as summarizing past messages or discarding less relevant ones, helps keep the chat history manageable. Maintaining essential context while reducing the overall state size ensures both performance and relevance.
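
A minimal sketch of the simplest pruning strategy, a sliding window, is shown below. It assumes messages are plain dicts with `role` and `content` keys, which is a simplification: LangGraph state normally holds LangChain message objects, and `langchain_core` ships a `trim_messages` helper for this job. Note that a naive window can leave an assistant reply at the front without the user turn that prompted it.

```python
def prune_history(messages: list[dict], max_messages: int) -> list[dict]:
    """Keep system messages plus only the most recent `max_messages` other messages."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    recent = others[-max_messages:] if max_messages > 0 else []
    return system + recent


history = [
    {"role": "system", "content": "You are a support agent."},
    {"role": "user", "content": "My order is late."},
    {"role": "assistant", "content": "Sorry to hear that. What is the order number?"},
    {"role": "user", "content": "It's 1234."},
    {"role": "assistant", "content": "Thanks, checking now."},
]
pruned = prune_history(history, max_messages=2)
print([m["content"] for m in pruned])
```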

Long-term memory in LangGraph

Recent updates in LangGraph introduce capabilities for long-term memory across multiple threads. This allows AI agents to retain information over extended periods, enhancing their ability to provide consistent and context-aware responses.

Imagine building a personalized customer support chatbot. The chatbot needs to remember user preferences and past interactions across sessions, such as previously reported issues, preferred communication styles, or product preferences.
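
To make the thread-versus-user distinction concrete, here is a plain-Python sketch, not LangGraph's API, in which conversation history is keyed by thread ID while preferences are keyed by user ID. A new session starts with an empty history but can still recall what the user said before. The IDs and keys are hypothetical; in LangGraph itself, cross-thread data is typically kept in a store (for example `InMemoryStore` from `langgraph.store.memory`) rather than a hand-rolled class.

```python
from collections import defaultdict


class UserMemory:
    """Long-term facts keyed by user ID, shared across all of that user's threads."""

    def __init__(self) -> None:
        self._facts: dict[str, dict[str, str]] = defaultdict(dict)

    def remember(self, user_id: str, key: str, value: str) -> None:
        self._facts[user_id][key] = value  # a newer value overwrites the old one

    def recall(self, user_id: str) -> dict[str, str]:
        return dict(self._facts.get(user_id, {}))


# Short-term history is per thread; long-term facts are per user
threads: dict[str, list[str]] = defaultdict(list)
memory = UserMemory()

# Session 1 (thread "t1"): the user states a preference
threads["t1"].append("user: Please contact me by email.")
memory.remember("user-42", "contact_preference", "email")

# Session 2 (thread "t2"): fresh history, but the preference is still available
print(threads["t2"])
print(memory.recall("user-42"))
```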

Challenges of long-term memory

Implementing long-term memory in AI agents presents several challenges:

- Relevance Maintenance: Over time, the volume of stored information can become overwhelming, making it difficult to ensure that only pertinent data is retained. Without effective management, the agent might struggle to differentiate between essential and irrelevant information.

- Data Freshness: Information can become outdated or less relevant as contexts change. Keeping the memory updated requires mechanisms to periodically review and refresh stored data.

- Resource Optimization: Storing extensive histories can lead to increased resource consumption, affecting both performance and cost. Efficient memory management strategies are necessary to balance the depth of memory with resource usage.
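
The three challenges can be made concrete with a toy in-memory store, sketched below under simplifying assumptions: relevance is approximated by word overlap (a real system would use embeddings or a knowledge graph), freshness by a fixed time-to-live, and resource limits by a hard item cap. Timestamps are passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    created_at: float  # timestamp in seconds


class BoundedMemory:
    """Toy long-term memory with a size cap, an age limit, and keyword-overlap relevance."""

    def __init__(self, capacity: int, ttl_seconds: float) -> None:
        self.capacity = capacity
        self.ttl = ttl_seconds
        self.items: list[MemoryItem] = []

    def add(self, text: str, now: float) -> None:
        self.items.append(MemoryItem(text, now))
        # Resource optimization: evict the oldest items once the cap is exceeded
        if len(self.items) > self.capacity:
            del self.items[: len(self.items) - self.capacity]

    def retrieve(self, query: str, now: float, k: int = 3) -> list[str]:
        # Data freshness: skip items older than the time-to-live
        fresh = [item for item in self.items if now - item.created_at <= self.ttl]
        query_words = set(query.lower().split())
        # Relevance maintenance: score each item by how many query words it shares
        scored = [(len(query_words & set(item.text.lower().split())), item) for item in fresh]
        ranked = sorted((pair for pair in scored if pair[0] > 0), key=lambda pair: pair[0], reverse=True)
        return [item.text for _, item in ranked[:k]]


memory = BoundedMemory(capacity=3, ttl_seconds=100)
memory.add("prefers email contact", now=0)
memory.add("reported a login issue", now=10)
memory.add("likes dark mode", now=20)
memory.add("prefers phone contact", now=150)  # exceeds the cap, so the oldest item is evicted
print(memory.retrieve("how should we contact them", now=150))
```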

How Zep addresses long-term memory challenges

Zep integrates with LangGraph to tackle these challenges, providing persistent, searchable long-term memory for AI applications. Here's how it works:

- Persistent storage: It comes with persistent storage solutions that allow AI agents to save and retrieve information across different sessions. This persistence ensures that the agent can maintain context even after interruptions or restarts.

- Efficient retrieval: Zep lets the agent search stored memory for relevant facts, so it can access pertinent information without reprocessing the entire conversation history on every turn.

- Privacy and security: It emphasizes data privacy, ensuring that user information is handled securely. This focus is crucial for applications that deal with sensitive or personal data.

- Framework-agnostic integration: Because Zep is not tied to a single framework, it can integrate with various AI frameworks, including LangGraph, without requiring significant changes to existing workflows.

Code example of Zep integration with LangGraph

Integrating Zep with LangGraph enables persistent user memory, allowing agents to recall information across sessions. Below is an example that demonstrates this integration:

import asyncio
import os
import uuid
from typing import Annotated

from typing_extensions import TypedDict
from langchain_core.messages import AIMessage, HumanMessage
from langchain_core.tools import tool
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
from langgraph.prebuilt import InjectedState, ToolNode
from zep_cloud.client import AsyncZep
from zep_cloud import Message

# Initialize the Zep client (reads the API key from the ZEP_API_KEY environment variable)
zep = AsyncZep(api_key=os.environ.get("ZEP_API_KEY"))

# Graph state: the conversation plus the Zep identifiers for this user and thread
class State(TypedDict):
    messages: Annotated[list, add_messages]
    user_name: str
    thread_id: str

# Tool: search the facts Zep has extracted from all of this user's conversations
@tool
async def search_facts(query: str, state: Annotated[dict, InjectedState], limit: int = 5) -> list[str]:
    """Search for facts from all conversations with the current user."""
    results = await zep.graph.search(
        user_id=state["user_name"],
        query=query,
        limit=limit,
        scope="edges",
    )
    return [edge.fact for edge in results.edges or []]

tools = [search_facts]
tool_node = ToolNode(tools)

# Chatbot node: record the user's turn in Zep, then answer from the user's Zep context
async def chatbot(state: State):
    user_message = state["messages"][-1]
    # Persist the turn so Zep can extract facts for future sessions
    await zep.thread.add_messages(
        state["thread_id"],
        messages=[Message(name=state["user_name"], role="user", content=user_message.content)],
    )
    if "search" in user_message.content.lower():
        # Stand-in for an LLM tool call: ask the tools node to run search_facts
        call = {"name": "search_facts", "args": {"query": user_message.content}, "id": uuid.uuid4().hex}
        return {"messages": [AIMessage(content="", tool_calls=[call])]}
    context = await zep.thread.get_user_context(state["thread_id"], mode="basic")
    facts_string = context.context or "No facts available."
    # Placeholder reply; a real agent would pass facts_string to an LLM as context
    response = AIMessage(content=f"Here is what I remember about you:\n{facts_string}")
    return {"messages": [response]}

# Build the graph
graph_builder = StateGraph(State)
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)
graph_builder.add_edge(START, "chatbot")
graph_builder.add_conditional_edges(
    source="chatbot",
    # Visit the tools node only when the chatbot requested a tool call
    path=lambda state: "tools" if state["messages"][-1].tool_calls else "end",
    path_map={"tools": "tools", "end": END},
)
graph_builder.add_edge("tools", END)
graph = graph_builder.compile()

# Create a Zep user and thread, then run one turn through the graph
async def run_with_zep():
    user_name = "Jane" + uuid.uuid4().hex[:4]
    thread_id = uuid.uuid4().hex
    await zep.user.add(user_id=user_name)
    await zep.thread.create(thread_id=thread_id, user_id=user_name)
    inputs = {
        # Example query (assumed); include "search" to exercise the tools path
        "messages": [HumanMessage(content="Can you search what you know about my preferences?")],
        "user_name": user_name,
        "thread_id": thread_id,
    }
    # The nodes are async, so stream with astream rather than stream
    async for output in graph.astream(inputs):
        for key, value in output.items():
            print(f"Output from node '{key}':")
            print(value)

asyncio.run(run_with_zep())

Initialization:

- Zep initialization: The AsyncZep client is initialized using an API key, establishing a connection to Zep's memory services.

- State definition: The State class defines the structure of the data maintained by LangGraph, including messages, user identifiers, and thread IDs.

Tool definition:

- search_facts function: This asynchronous function queries Zep for facts relevant to the user's query. It calls Zep's graph.search method, scoped to the current user, to retrieve pertinent facts extracted from earlier conversations.

Chatbot function:

- chatbot function: This function retrieves the user's context from Zep using the thread ID and constructs a response that includes this historical context. If no facts are available, it says so.

Graph construction:

- StateGraph initialization: A StateGraph is created with the defined State.

- Node addition: The chatbot function and a ToolNode wrapping search_facts are added as nodes to the graph.

- Edge definition: Edges are established to define the workflow. The chatbot node directs the flow to the search facts node if the user input contains the word "search"; otherwise, the workflow ends.

Running the graph:

- run_with_zep function: This asynchronous function creates a unique user and thread in Zep and defines the initial user input. It then streams the outputs from the graph, printing each one as it is generated.

For more detailed insights into how Zep leverages AI knowledge graphs for memory management, refer to Zep's blog on AI Knowledge Graph Memory. This provides an in-depth look at the benefits of using knowledge graphs to enhance AI memory systems.

How memory will evolve in AI applications

As AI technologies advance, the role of memory systems becomes increasingly vital in creating context-aware and personalized experiences. Here are some key points:

Context and personalization

Long-term memory is essential for providing personalized and contextually relevant interactions. AI agents that can remember past interactions and user preferences can deliver more tailored responses, improving user satisfaction and engagement. This capability is particularly important for applications like virtual assistants, customer support, and personalized learning tools, where understanding the user's history can significantly enhance the interaction quality.

Role of Zep

Zep extends LangGraph's memory capabilities with persistent storage and fact retrieval across sessions. Its focus on privacy ensures that user data is handled securely, addressing one of the critical concerns in AI development.

Looking ahead, developers will need to implement strategies for pruning and selecting relevant history to prevent the accumulation of excessive data, which can hinder performance.

Techniques like summarization and selective retention will be essential to balance memory depth with efficiency. Additionally, robust privacy measures will remain a priority, ensuring that user data is protected while enabling meaningful interactions.
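
Summarization can be sketched as a function that folds older messages into a single summary line and keeps the most recent ones verbatim. The summarizer is passed in as a parameter; the join-based stand-in below is only a placeholder for an LLM call, and representing messages as plain strings is a simplifying assumption.

```python
from typing import Callable


def compact_history(messages: list[str], keep_last: int, summarize: Callable[[list[str]], str]) -> list[str]:
    """Replace all but the last `keep_last` messages with a single summary line."""
    split = max(len(messages) - keep_last, 0)
    older, recent = messages[:split], messages[split:]
    if not older:
        return list(recent)  # nothing old enough to summarize
    return [f"Summary of earlier conversation: {summarize(older)}"] + recent


# Stand-in summarizer; in practice this would be an LLM call
def naive_summary(msgs: list[str]) -> str:
    return " | ".join(msgs)


history = ["Hi, I need help with billing.", "Sure, what's the issue?", "I was charged twice.", "Let me check that."]
print(compact_history(history, keep_last=2, summarize=naive_summary))
```

Keeping the recent turns verbatim preserves the exact wording the model most likely needs, while the summary retains older context at a fraction of the token cost.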

Limitations of LangGraph

While LangGraph offers a robust framework for building complex AI workflows, it is not without its challenges. Understanding these limitations is crucial for developers to make informed decisions about its adoption.

Complexity of setup

One of the primary drawbacks of LangGraph is its complexity during the initial setup. Unlike LangChain, which is relatively straightforward to configure for simple task chains, LangGraph requires a deeper understanding of graph-based architectures and state management.

Developers need to define state structures, nodes, and edges, which can be time-consuming and may present a steep learning curve, especially for those new to graph-oriented frameworks.

Agent looping

A significant concern with LangGraph is the potential for agents to unintentionally create loops. If an agent's output is routed back to itself without a proper stopping condition, the graph can keep cycling instead of terminating.

This not only increases the runtime but also leads to higher token consumption, which can be costly and inefficient. Such scenarios require developers to implement safeguards to prevent agents from getting stuck in repetitive cycles.
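
LangGraph does cap runaway cycles: every run has a `recursion_limit` (25 steps by default, configurable per call, e.g. `graph.invoke(inputs, {"recursion_limit": 10})`), and exceeding it raises `GraphRecursionError`. A gentler safeguard is to count steps in the state and let the conditional edge route to the end once a budget is spent. The plain-Python sketch below simulates that routing logic outside LangGraph; the `steps` and `done` state keys are hypothetical.

```python
from typing import Callable

State = dict


def route_after_agent(state: State, max_steps: int) -> str:
    """Conditional-edge logic: loop back to the agent only while work remains and budget allows."""
    if state["done"] or state["steps"] >= max_steps:
        return "end"
    return "agent"


def run(agent: Callable[[State], State], max_steps: int) -> State:
    """Mimic an agent -> router -> agent cycle, counting each agent step."""
    state: State = {"done": False, "steps": 0}
    while True:
        state = agent(state)
        state["steps"] += 1
        if route_after_agent(state, max_steps) == "end":
            return state


def stuck_agent(state: State) -> State:
    return dict(state)  # never sets done=True, the failure mode described above


def finishing_agent(state: State) -> State:
    return {**state, "done": True}


print(run(stuck_agent, max_steps=4)["steps"])
print(run(finishing_agent, max_steps=4)["steps"])
```

The stuck agent is cut off after its step budget instead of consuming tokens until a hard error, while a well-behaved agent exits as soon as it reports completion.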

Performance impact

Unmanaged cycles and complex workflows can degrade the overall performance of applications built with LangGraph.

Each loop or conditional branch consumes additional resources, potentially slowing down the application and increasing operational costs. Developers must design workflows carefully to avoid unnecessary loops and optimize resource usage, ensuring that the application remains both efficient and cost-effective. In a recent update of the LangGraph Python library, performance enhancements and CI benchmarks were introduced to optimize workflow efficiency and address some of the previously noted limitations, such as resource usage and scalability challenges.

Last thoughts on LangChain vs LangGraph

LangChain and LangGraph each bring unique strengths, making them suitable for different types of AI workflows.

LangChain is ideal for simpler, linear task chains, offering flexibility and modularity. In contrast, LangGraph shines in managing more complex workflows that require advanced orchestration and state management. The ability to handle persistent states and incorporate human-in-the-loop workflows further enhances its capability to build sophisticated AI agents.

Advanced memory management in LangGraph, particularly through integrations with tools like Zep, adds significant value. These memory systems enable AI agents to maintain context and personalize interactions over extended periods, which is crucial for delivering consistent and user-centric experiences.

By understanding the differences between LangChain and LangGraph, developers can choose the framework that best aligns with their project requirements, ensuring the creation of effective, scalable, and intelligent AI solutions.